- Secure Shell (SSH)

- Encapsulating protocols at Network Layer

- Encapsulating protocols at Transport Layer

- Subtle points of using encapsulation

- Protocol Design for an encapsulating protocol

Encapsulation is the process of encasing the payload sent by an endpoint in another protocol’s payload, with the encapsulating protocol attaching its own header and, in some cases, a trailer. Every layer of the network stack applies it to the data it processes. For example, an HTTP message (L7, application layer) is encapsulated under a TCP header (L4) and then under an IP header (L3), and so on down to the physical layer.
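The header-plus-trailer wrapping described above can be sketched as follows. This is a minimal illustration, not a real protocol: the 4-byte header (protocol id and length) and the CRC32 trailer are a hypothetical toy format chosen only to show the nesting.

```python
import struct
import zlib
from typing import Tuple

# Hypothetical toy format: 2-byte protocol id + 2-byte payload length as the
# header, and a 4-byte CRC32 of the payload as the trailer.
HEADER = struct.Struct("!HH")
TRAILER = struct.Struct("!I")


def encap(proto_id: int, payload: bytes) -> bytes:
    """Wrap payload with this layer's header and trailer."""
    header = HEADER.pack(proto_id, len(payload))
    trailer = TRAILER.pack(zlib.crc32(payload))
    return header + payload + trailer


def decap(packet: bytes) -> Tuple[int, bytes]:
    """Strip this layer's header and trailer and return (proto_id, payload)."""
    proto_id, length = HEADER.unpack_from(packet, 0)
    payload = packet[HEADER.size:HEADER.size + length]
    (crc,) = TRAILER.unpack_from(packet, HEADER.size + length)
    if len(payload) != length or crc != zlib.crc32(payload):
        raise ValueError("corrupt packet")
    return proto_id, payload


# Nest an application message twice, the way successive layers would.
message = b"GET / HTTP/1.1\r\n\r\n"
inner = encap(7, message)
outer = encap(4, inner)
```

Each call to `decap` peels off exactly one layer, so the receiver applies it once per layer in the reverse order of encapsulation.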

This article doesn’t deal with encapsulation across the general network stack but rather focuses on encapsulating protocols that try to mask the identity of the original payload from network middleboxes to provide anonymity or virtualized networking.

Core parts of a secure communication framework relying on encapsulation are:

- Encapsulation and decapsulation (encap and decap) libraries on the sender and receiver, as well as a means to exchange the metadata that enables encap and decap.

- The payload, which is the original packet, optionally including upper-layer protocols, and which can be encrypted or plain.

- Algorithms and tools to reorder packets that arrive out of order and to discard duplicates.

- Confidentiality, as well as detection of malicious activity: active attacks such as MITM and replay, and passive attacks such as eavesdropping.

Sequence numbers are monotonically increasing numbers that show the ordering of the packets in a stream. They do not necessarily start from 0. Most sequence numbers wrap around, which is especially useful for long-lived connections: after reaching the maximum of the valid range, the sequence number returns to the beginning. Widely known from TCP, sequence numbers have become common in most reliable communication protocols. Although primarily meant for putting incoming packets back in the correct order, these numbers can be used for other purposes as well.

- Sequence numbers in headers such as ESP help maintain the anti-replay window, which prevents attackers from replaying previously captured data. A packet whose sequence number falls behind the window, or duplicates one already received inside the window, is treated as a retransmission or a replay and is rejected.

- ESP also supports 64-bit extended sequence numbers (ESN). Only the low-order 32 bits are transmitted, while the high-order 32 bits are included in the integrity check, which makes ESN a security enhancement for high-speed, long-lived associations.
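The anti-replay window mentioned above can be sketched with a bitmap. This is a simplified illustration in the style of ESP, assuming 32-bit sequence numbers that start at 1 and no ESN; the class and method names are hypothetical. A real implementation would also verify the packet's integrity check before updating the window.

```python
class ReplayWindow:
    """Sliding-window replay check (simplified sketch, no ESN, no wrap)."""

    def __init__(self, size: int = 64) -> None:
        self.size = size
        self.highest = 0   # highest sequence number accepted so far
        self.bitmap = 0    # bit i set => sequence (highest - i) was seen

    def check_and_update(self, seq: int) -> bool:
        """Return True and record seq if it is fresh; False if it is rejected."""
        if seq == 0:
            return False                      # assumption: counters start at 1
        if seq > self.highest:                # packet advances the right edge
            shift = seq - self.highest
            mask = (1 << self.size) - 1
            self.bitmap = ((self.bitmap << shift) | 1) & mask
            self.highest = seq
            return True
        offset = self.highest - seq
        if offset >= self.size:               # behind the left edge: too old
            return False
        if self.bitmap & (1 << offset):       # duplicate inside the window
            return False
        self.bitmap |= 1 << offset            # late but fresh: mark as seen
        return True


window = ReplayWindow(size=4)
results = [window.check_and_update(s) for s in (1, 3, 2, 2, 3)]
```

Packets 1, 3 and 2 are accepted (2 arrives late but inside the window), while the second copies of 2 and 3 are rejected as duplicates.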

Security Association between the endpoints of an encapsulated path: a Security Association (SA) is a critical aspect of securing a communication path. It bundles the crypto algorithm, integrity algorithm, keys, etc., and is used especially in the case of IPsec.

Sharing keys: Manual keying, static configuration-based keying, and, most recommended, key exchange protocols such as IKE (Internet Key Exchange) can be used to share secret keys.

Unique identification: The onus of uniquely identifying the multiple paths/streams, and the order of packets within each of them, lies with the creator of the header attached to the encapsulated payload. Such a unique identifier or set of attributes should be able to distinguish multiple coexisting tunnels. Some examples of unique identifiers in multiplexed, non-tunneling use cases are

- HTTP/2 uses stream ID for multiplexed flows within a single connection

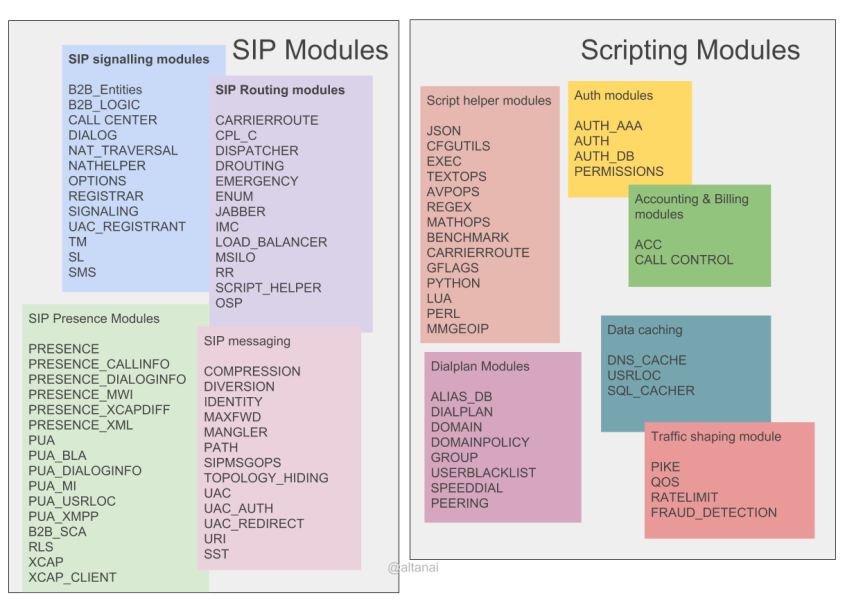

- SIP uses Session ID

Similarly, examples of unique identifiers in tunneling use cases are

- GRE uses the key field to distinguish between individual flows

- MPLS uses labels to identify different streams associated with different classes.

- ESP uses the SPI, a 32-bit identifier that binds a packet to a security association and its cryptographic keys. The receiver uses it to demultiplex inbound traffic.

- L2TP (Layer 2 Tunneling Protocol) uses a tunnel ID to identify coexisting tunnels.

- QUIC uses connection ID

Others can rely on sequence numbers as counters or even destination address mappings to identify the path/stream. However, these approaches have many limitations. Most protocols would try to attach a new custom field.
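A receiver typically uses such an identifier as a lookup key into per-tunnel state. The sketch below assumes a hypothetical header whose first 4 bytes carry a 32-bit identifier (similar in spirit to an SPI or a GRE key); the `Tunnel` class and the table contents are made up for illustration.

```python
import struct
from dataclasses import dataclass


@dataclass
class Tunnel:
    name: str
    packets: int = 0


# Hypothetical table mapping a 32-bit identifier to per-tunnel state.
tunnels = {0x1001: Tunnel("branch-a"), 0x1002: Tunnel("branch-b")}


def demux(packet: bytes) -> Tunnel:
    """Read the identifier from the first 4 bytes and find its tunnel."""
    if len(packet) < 4:
        raise ValueError("packet too short")
    (ident,) = struct.unpack_from("!I", packet, 0)
    tunnel = tunnels.get(ident)
    if tunnel is None:
        raise KeyError(f"unknown tunnel id {ident:#x}")
    tunnel.packets += 1
    return tunnel


hit = demux(struct.pack("!I", 0x1002) + b"payload")
```

Because the identifier is explicit, two tunnels between the same pair of addresses stay distinguishable, which address-based mapping alone cannot guarantee.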

The following sections describe some encapsulating protocols in more detail.

Secure Shell (SSH)

While SSH is not generally thought of as a tunneling protocol, it does create a communication link over which remote access and file transfer can happen securely, which forms the crux of what is considered VPN functionality.

Encapsulating protocols at Network Layer

IP in IP

IP-in-IP encapsulation, as IPv4 in IPv4, IPv4 in IPv6, IPv6 in IPv4, or IPv6 in IPv6, is most commonly used for network virtualization (VPN) and other kinds of network-as-a-service offerings such as Secure Access Service Edge (SASE).

Multiprotocol Label Switching (MPLS)

MPLS can transport IP packets (IPv4 and IPv6) over an IPv4 backbone. Originally designed for forwarding and routing, MPLS uses packet labels instead of traditional IP-based lookups to make next-hop forwarding decisions. This enables the creation of paths based on QoS. In contrast to ESP, which is applied at layer 3, MPLS operates at layer 2.5 (between layers 2 and 3).

ESP (Encapsulating Security Payload)

ESP, part of the IPsec suite, enables confidentiality, integrity, and authenticity for the IP packets it encapsulates. An ESP packet contains:

- SPI (Security Parameters Index), which links the packet to its SA (security association)

- Sequence number, which is a counter used to prevent replay attacks

- Payload data, which can be encrypted or plain

- Next Header, carried in the ESP trailer after the payload, which specifies the protocol type of the data in the payload.

ESP can operate in transport mode (protecting the payload of an IP packet) or tunnel mode (protecting the entire IP packet).

[Figure: layout of a generic IP packet, and of the same packet with the Authentication Header and with ESP, in transport mode and tunnel mode]

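Without considering encryption or authentication overhead, the basic ESP header is 8 bytes in size (a 4-byte SPI and a 4-byte sequence number). The overall ESP overhead is relatively smaller in transport mode than in tunnel mode, because tunnel mode also adds a new outer IP header.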

[Figure: original IPv4 and IPv6 packets, and their ESP layout in transport mode and tunnel mode]
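The size difference between the two modes can be made concrete with a small calculation. This is a sketch under stated assumptions: it counts only the fixed 8-byte ESP header, the 2 mandatory trailer bytes (pad length and next header), and, in tunnel mode, a minimum-size outer IP header. The IV, padding, and integrity check value depend on the chosen algorithms and are deliberately left out; the function names are hypothetical.

```python
import struct

ESP_HEADER = struct.Struct("!II")   # SPI, sequence number
ESP_TRAILER_MIN = 2                 # pad length + next header, zero padding
OUTER_IP_HEADER = {4: 20, 6: 40}    # minimum IPv4 header, fixed IPv6 header


def esp_header(spi: int, seq: int) -> bytes:
    """Pack the fixed part of the ESP header in network byte order."""
    return ESP_HEADER.pack(spi, seq)


def base_overhead(mode: str, outer_ip_version: int = 4) -> int:
    """Bytes added by ESP, ignoring IV, padding and integrity check value."""
    overhead = ESP_HEADER.size + ESP_TRAILER_MIN
    if mode == "tunnel":
        overhead += OUTER_IP_HEADER[outer_ip_version]   # new outer IP header
    elif mode != "transport":
        raise ValueError(f"unknown mode: {mode}")
    return overhead


header = esp_header(spi=0x1234, seq=1)
transport = base_overhead("transport")
tunnel_v4 = base_overhead("tunnel", 4)
tunnel_v6 = base_overhead("tunnel", 6)
```

Under these assumptions transport mode adds 10 bytes, while tunnel mode adds 30 bytes over IPv4 and 50 bytes over IPv6.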

Encapsulating protocols at Transport Layer

TLS and Datagram Transport Layer Security (DTLS)

While TLS is designed for TCP, DTLS securely encapsulates datagrams over UDP. In contrast to ESP, which encapsulates traffic for VPN use cases, DTLS is mostly used to encapsulate real-time data traffic, such as in WebRTC and gaming.

Generic Routing Encapsulation (GRE)

Another tunneling protocol that sits at this level is GRE, which is carried directly over IP (IP protocol number 47). It is agnostic to the layer 3 payload: it can tunnel any layer 3 protocol, from IPv4 and IPv6 to other raw formats. The “Protocol Type” field in the GRE header specifies the protocol type of the encapsulated packet. GRE has a minimal header structure with no out-of-the-box security such as encryption.
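The minimal GRE header can be sketched as below: 16 bits of flags and version, a 16-bit Protocol Type, and an optional 32-bit key when the K bit is set. The sketch handles only the key option (no checksum or sequence number fields), and the function names are hypothetical.

```python
import struct
from typing import Optional, Tuple

GRE_FLAG_KEY = 0x2000      # K bit: a 32-bit key field follows the base header
ETHERTYPE_IPV4 = 0x0800    # Protocol Type values reuse EtherType numbers
ETHERTYPE_IPV6 = 0x86DD


def gre_encap(inner: bytes, protocol_type: int,
              key: Optional[int] = None) -> bytes:
    """Prepend a GRE header, with the optional key field if key is given."""
    flags = GRE_FLAG_KEY if key is not None else 0
    header = struct.pack("!HH", flags, protocol_type)
    if key is not None:
        header += struct.pack("!I", key)
    return header + inner


def gre_decap(packet: bytes) -> Tuple[int, Optional[int], bytes]:
    """Return (protocol_type, key or None, inner packet)."""
    flags, protocol_type = struct.unpack_from("!HH", packet, 0)
    if flags & ~GRE_FLAG_KEY:
        raise ValueError("only the key option is handled in this sketch")
    offset, key = 4, None
    if flags & GRE_FLAG_KEY:
        (key,) = struct.unpack_from("!I", packet, offset)
        offset += 4
    return protocol_type, key, packet[offset:]


keyed = gre_encap(b"inner", ETHERTYPE_IPV6, key=42)
plain = gre_encap(b"x", ETHERTYPE_IPV4)
```

The receiver dispatches on the Protocol Type to decide how to parse the inner packet, and can use the key to tell coexisting flows apart.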

QUIC

QUIC encapsulates higher-layer protocols, such as HTTP/3, within its own transport layer over UDP. Besides efficient multiplexing and encryption, QUIC also excels at connection migration and quick handshakes.

In contrast to ESP, which is part of the IPsec suite and aimed at lower-layer underlay tunneling, QUIC is aimed at encapsulating application data such as web traffic. It runs over UDP and adds its own header with control information.

Note that MASQUE is another encapsulation protocol built over QUIC. As these are still nascent and evolving, I will update this section as more specifications are standardised.

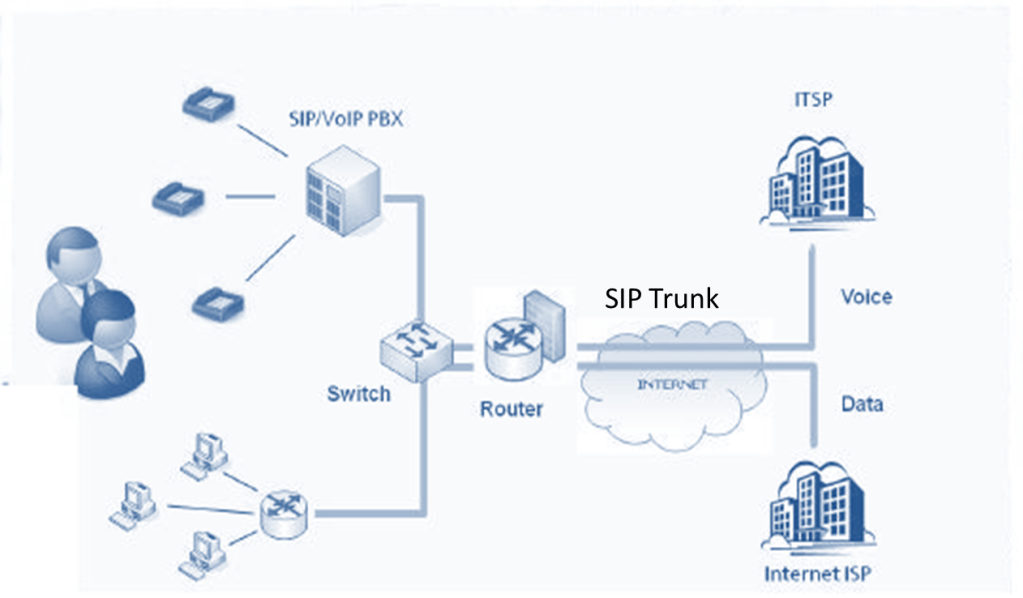

TCP encapsulation

Many network middleboxes that filter traffic on public hotspots block all UDP traffic. As a result, UDP traffic, such as media streams for VoIP calls or even IKEv2 UDP packets, gets blocked. But middleboxes are likely to allow TCP connections through because they appear to be web traffic.

- (+) Provides NAT support

- (+) Avoids UDP fragmentation

- (-) Overhead of TCP or TLS

When designing a TCP-based encapsulation, it is recommended that initiators use TCP encapsulation only when traffic over UDP is blocked. All encapsulated messages can be carried as one stream over a single TCP connection. This way, any firewall or NAT mappings allocated for the TCP connection apply to all of the encapsulated traffic, which avoids a large number of round trips.
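Because TCP is a byte stream, datagrams carried over it need explicit message boundaries. A common approach is a length prefix, sketched below. The 16-bit prefix that counts only the payload bytes is an assumption made for this illustration and is not the exact wire format of any particular specification.

```python
import struct

LEN = struct.Struct("!H")   # hypothetical 16-bit length prefix (payload only)


def frame(message: bytes) -> bytes:
    """Prefix one datagram with its length so it can be sent over TCP."""
    if len(message) > 0xFFFF:
        raise ValueError("message too large for a 16-bit length prefix")
    return LEN.pack(len(message)) + message


class Deframer:
    """Reassembles whole messages from arbitrary TCP read chunks."""

    def __init__(self) -> None:
        self.buffer = bytearray()

    def feed(self, chunk: bytes) -> list:
        """Add received bytes and return every message completed so far."""
        self.buffer += chunk
        messages = []
        while len(self.buffer) >= LEN.size:
            (length,) = LEN.unpack_from(self.buffer, 0)
            end = LEN.size + length
            if len(self.buffer) < end:
                break                       # wait for the rest of the message
            messages.append(bytes(self.buffer[LEN.size:end]))
            del self.buffer[:end]
        return messages


stream = frame(b"ike-msg") + frame(b"esp-packet")
deframer = Deframer()
first = deframer.feed(stream[:5])
second = deframer.feed(stream[5:12])
third = deframer.feed(stream[12:])
```

The deframer tolerates reads that split a message or contain several messages at once, which is the normal case on a TCP socket.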

Subtle points of using encapsulation

In addition to encapsulation overhead and reachability, the following are concerns that occur in encapsulating the data and traversing through a complex network.

Path MTU and fragmentation

ICMP messages used for Path MTU discovery can be blanket-blocked by firewalls, which prevents the proper MTU from being set for the encapsulated and overall packet. Subsequently, the size of the encapsulated packet may exceed the path MTU, leading to fragmentation, which in turn leads to

- latency in transmission

- packets that middleboxes or VPN hubs cannot decipher or route

- fragments that the receiver cannot process or reassemble properly, which count as packet loss and lead to retransmission and further congestion.
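To avoid fragmentation, a tunnel endpoint can budget for the encapsulation overhead when choosing the MTU it offers to inner traffic. The calculation below is a minimal sketch assuming minimum-size headers (IPv4 without options, GRE without optional fields); the function names are hypothetical.

```python
IPV4_HEADER = 20   # minimum IPv4 header, no options
IPV6_HEADER = 40   # fixed IPv6 header, no extension headers
GRE_HEADER = 4     # base GRE header without optional fields


def inner_mtu(path_mtu: int, *overheads: int) -> int:
    """Largest inner packet that fits in one outer packet on this path."""
    mtu = path_mtu - sum(overheads)
    if mtu <= 0:
        raise ValueError("overhead exceeds the path MTU")
    return mtu


def needs_fragmentation(inner_len: int, path_mtu: int, *overheads: int) -> bool:
    """True if an inner packet of inner_len bytes would not fit after encap."""
    return inner_len > inner_mtu(path_mtu, *overheads)


gre_over_v4 = inner_mtu(1500, IPV4_HEADER, GRE_HEADER)
gre_over_v6 = inner_mtu(1500, IPV6_HEADER, GRE_HEADER)
```

With a 1500-byte path MTU, GRE over IPv4 leaves 1476 bytes for the inner packet and GRE over IPv6 leaves 1456, so a full-size 1500-byte inner packet would be fragmented in both cases.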

Migration of inner payload from one to another protocol

For IPv4 encapsulating other IP versions in a dual-stack implementation, the destination can sometimes decide to upgrade, such as from IPv4 to IPv6. The upgrade involves a period of using IPv4 and IPv6 simultaneously, followed by a gradual transition towards IPv6. The key differences between IPv4 and IPv6 are

- header size, which is 20 bytes (minimum, without options) for IPv4 and 40 bytes (fixed) for IPv6.

- fragmentation: only IPv4 allows routers to fragment packets in transit; in IPv6, only the source can fragment.

- address space: IPv6 has a far larger, unique address space, so NAT is not needed as much as with the limited addressing of IPv4.

With migration in effect on a dual stack, the overlay depends on the underlay protocol’s ability to carry its traffic. For example, in IPv6 over IPv4, since IPv4 underlays are well adopted in network infrastructure, original payload packets with an IPv6 header use an IPv4 header as the encapsulating header to be carried across the tunnel. BGP and OSPF are common routing protocols for an IPv4 underlay network.

| | IPv4 | IPv6 |
|---|---|---|
| Overlay | IPv4 over IPv6; IPv4 over IPv4 (GRE) | IPv6 over IPv4 (6in4); IPv6 over IPv6 |

Prioritization and congestion post encapsulation

Many network middleboxes (routers, cloud firewalls, gateways, and so on) implement traffic filtering, shaping, or queue management based on prioritization (for example, AQM at ISPs). Due to the masked nature of encapsulated packets, there is a high chance that the middleboxes cannot ascertain their identity and therefore deprioritize them.

Misordering

Encapsulated packets can arrive out of order. To keep the endpoints connected, they need to be put back in the correct order with manageable latency at the receiving endpoint. This is especially critical when encapsulating packets for low-latency applications. For example, for a video codec, a late-arriving packet that carries part of a keyframe can force all subsequent packets to wait in the receiver buffer. This cascades into a downward spiral of selective acknowledgments, retransmissions, and discarded packets, which can further intensify the problem.
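A receiver-side reorder buffer with a bounded wait can be sketched as follows. This is a simplified illustration: the class name and the `max_pending` limit are hypothetical, and sequence number wrap-around is not handled. When too many packets are waiting behind a gap, the buffer gives up on the missing packet and skips ahead, trading completeness for latency.

```python
class ReorderBuffer:
    """Delivers payloads in sequence order, skipping gaps that last too long."""

    def __init__(self, first_seq: int = 0, max_pending: int = 32) -> None:
        self.next_seq = first_seq       # next sequence number to deliver
        self.max_pending = max_pending  # how many packets may wait for a gap
        self.pending = {}               # seq -> payload, held out of order

    def push(self, seq: int, payload: bytes) -> list:
        """Store a packet and return every payload now deliverable in order."""
        if seq < self.next_seq or seq in self.pending:
            return []                   # late or duplicate packet: drop
        self.pending[seq] = payload
        if len(self.pending) > self.max_pending:
            # Stop waiting for the gap: jump to the oldest buffered packet.
            self.next_seq = min(self.pending)
        out = []
        while self.next_seq in self.pending:
            out.append(self.pending.pop(self.next_seq))
            self.next_seq += 1
        return out


buf = ReorderBuffer(first_seq=1, max_pending=2)
held = buf.push(2, b"b")        # waits for packet 1
released = buf.push(1, b"a")    # fills the gap and releases both
```

A small `max_pending` suits latency-sensitive traffic such as live video, while a larger value suits traffic where completeness matters more than delay.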

Loss of ECN signal

Congestion marks that routers set on the outer header never reach the receiver of the inner packet unless they are carried across the tunnel endpoints. Some systems overcome this by copying the ECN field between the inner header of the original packet and the outer header. However, this has to be a mutable field, since it can change as the packet traverses the many nodes of a large network. It is therefore not protected by the integrity algorithms, and because of the risk of misuse, this once-supported behavior has been restricted or dropped by the updated specifications of some encapsulating protocols, such as IPsec.
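The copying approach can be sketched on the TOS / Traffic Class byte, whose low two bits carry the ECN codepoint. This is a deliberately simplified illustration with hypothetical function names: on encapsulation the inner ECN bits are copied outward, and on decapsulation a congestion mark on the outer header is carried inward only if the inner packet is ECN-capable. Complete tunnel rules cover more cases (for example, what to do when the outer header is marked but the inner packet is not ECN-capable), which this sketch simply leaves unmarked.

```python
NOT_ECT, ECT_1, ECT_0, CE = 0b00, 0b01, 0b10, 0b11
ECN_MASK = 0b11   # low two bits of the IPv4 TOS / IPv6 Traffic Class byte


def encap_tos(inner_tos: int, outer_dscp: int = 0) -> int:
    """Outer TOS byte: chosen DSCP, ECN bits copied from the inner header."""
    return (outer_dscp << 2) | (inner_tos & ECN_MASK)


def decap_tos(inner_tos: int, outer_tos: int) -> int:
    """Inner TOS byte after decapsulation, carrying over a congestion mark."""
    inner_ecn = inner_tos & ECN_MASK
    outer_ecn = outer_tos & ECN_MASK
    if outer_ecn == CE and inner_ecn != NOT_ECT:
        return (inner_tos & ~ECN_MASK) | CE   # propagate congestion mark
    return inner_tos


inner = (46 << 2) | ECT_0             # DSCP 46, ECN-capable transport
outer = encap_tos(inner)              # outer header as sent into the tunnel
marked = (outer & ~ECN_MASK) | CE     # a router on the path signals congestion
delivered = decap_tos(inner, marked)  # inner header now carries the mark
```

Note that the outer ECN bits change in flight, which is exactly why they cannot be covered by the integrity check.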

Classification and tagging

Classification and tagging of traffic within a stream of encapsulated packets is also a challenge. The payload of the encapsulated packets could contain mixed content that cannot be mapped to any single class, so a tag cannot be used to prioritize real-time or critical traffic. DSCP tag propagation from inner to outer packets, for example, can help in this direction but runs the risk of traffic profiling by middleboxes.

State Synchronization in Multipath

Scaling to involve multiple streams in a session using encapsulation poses challenges. This problem is further amplified in the case of multi-sender and multi-receiver scenarios.

Applying policies

For a stateful, contextual, and intelligent decision-making process, a sender needs to leverage the multiple available paths. It needs to discover alternate reachable paths and collect and sync network metrics to use the resources matching the needs of time sensitivity, cost, etc.

Anti-replay window synchronization

Difficulty in synchronizing anti-replay windows when multiple paths are involved impacts load-sharing encapsulated traffic. Additional issues may involve multicore or distributed operations.

A short-term solution to sync issues is to have a very large anti-replay window. However, making the window too big increases the chance of accepting packets that are far apart in sequence, which leads to further unpredictability in ordering. In high-throughput scenarios, it may even be difficult for the CPU to keep the state of an immensely large window in cache, which causes undue latency penalties.

Protocol Design for an encapsulating protocol

Traffic Flow Confidentiality (TFC)

Primarily, an encapsulating protocol needs to hide the traffic from outside visibility, which can be done by rewriting the source and destination information as well as encrypting and/or padding the payload so that it is not intelligible to middleboxes. RFC 4303 also describes this for ESP in tunnel mode.

Dummy data: Other means to secure confidentiality could be to use dummy packets or even dummy streams.
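Padding and dummy packets can be sketched as below. The bucket sizes, the 2-byte inner length field, and the function names are all assumptions made for illustration; they are not taken from any specification. The padding must be applied before encryption so that an observer sees only the padded size.

```python
import os
import struct

BUCKETS = (128, 256, 512, 1024, 1500)   # hypothetical padded sizes in bytes
LEN = struct.Struct("!H")               # real payload length, to be encrypted


def pad(payload: bytes) -> bytes:
    """Pad payload up to the next bucket size, recording its true length."""
    needed = LEN.size + len(payload)
    for size in BUCKETS:
        if needed <= size:
            filler = os.urandom(size - needed)
            return LEN.pack(len(payload)) + payload + filler
    raise ValueError("payload larger than the biggest bucket")


def unpad(padded: bytes) -> bytes:
    """Recover the original payload using the recorded length."""
    (length,) = LEN.unpack_from(padded, 0)
    return padded[LEN.size:LEN.size + length]


def dummy_packet() -> bytes:
    """A packet with no real payload; the receiver discards it after unpad()."""
    return pad(b"")


small = pad(b"hi")
large = pad(b"x" * 200)
```

Every packet on the wire then has one of a handful of sizes, and dummy packets are indistinguishable from short real ones once encrypted.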

Segmentation of information into header and trailer

- Avoid leaking information about the payload

- Enable reassembly in case of fragmentation

Dynamically adjustable anti-replay window sizes

A smaller window is faster to process and more secure but unsuitable for multi-legged sessions, while a large window has a performance impact and can weaken protection against replays. By implementing a dynamically sized sliding window, the protocol can keep up with the instantaneous requirements of the network, such as keeping the window large under higher packet loss but shrinking it when the traffic is latency-sensitive and out-of-sequence packets are meant to be discarded.

- Multiple replay windows for multiple paths

- Synchronize sequences with minimal communication between the threads
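One way to picture per-path, resizable replay windows is sketched below. It is a simplified illustration: the class names are hypothetical, a Python set stands in for the bitmap a real implementation would likely use, and the policy for when to resize is left to the caller.

```python
class PathWindow:
    """Replay state for one path: highest sequence seen plus recent ones."""

    def __init__(self, size: int) -> None:
        self.size = size
        self.highest = 0
        self.seen = set()

    def accept(self, seq: int) -> bool:
        """Return True if seq is inside the window and not yet received."""
        if seq <= self.highest - self.size or seq in self.seen:
            return False                    # too old, or already received
        self.seen.add(seq)
        self.highest = max(self.highest, seq)
        self._trim()
        return True

    def resize(self, size: int) -> None:
        """Grow under loss or reordering, shrink for latency-sensitive traffic."""
        self.size = size
        self._trim()

    def _trim(self) -> None:
        floor = self.highest - self.size    # everything at or below is dropped
        self.seen = {s for s in self.seen if s > floor}


class MultipathReplay:
    """One independent window per path id, so paths share no sequence state."""

    def __init__(self, default_size: int = 64) -> None:
        self.default_size = default_size
        self.paths = {}

    def accept(self, path_id: int, seq: int) -> bool:
        window = self.paths.setdefault(path_id, PathWindow(self.default_size))
        return window.accept(seq)


replay = MultipathReplay(default_size=4)
same_seq_two_paths = (replay.accept(1, 10), replay.accept(2, 10))
```

Because each path owns its window, threads or cores handling different paths do not need to synchronize on a shared counter.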

Security constraints

Enabling multiple child SAs to be linked to a session is one way to overcome both the multipath and anti-replay issues mentioned above. The multiple child SAs under a parent SA can be thought of as child tunnels inside a parent tunnel, since this makes it possible to uniquely identify and maintain each SA-associated path with SPI-based identification. As a result, there is no overhead in synchronizing sequence numbers for ordering in the multipath or multicore case. Such concepts have been proposed in a few IETF drafts using different terminologies, such as sub-tunnel, cluster-tunnel, etc.

References :