- RTP (Real-time Transport Protocol)

- RTP Session

- RTSP (Real-Time Streaming Protocol)

- SRTP (Secure Real-time Transport Protocol)

- RTP in a VoIP Communication system and Conference streaming

- Mixers, Translators and Monitors

- Multiplexing RTP Sessions

- Session Description Protocol (SDP) Capability Negotiation

- Adaptive bitrate control

RTP is a protocol for delivering media streams end-to-end in real time over IP networks. Its applications include VoIP (with SIP or XMPP signalling), push-to-talk, WebRTC, teleconferencing, IoT media streaming, and the transport of audio, video or simulation data over multicast or unicast network services.

RTSP provides stream control features to an RTP stream along with session management.

RTCP (RTP Control Protocol) is the companion protocol to RTP, used for quality-of-service feedback and inter-stream synchronization.

- Receiver Reports (RRs) include information about packet loss, interarrival jitter, and a timestamp allowing computation of the round-trip time between the sender and receiver.

- Sender Reports (SRs) include the number of packets and bytes sent, and a pair of timestamps (NTP wallclock time and the corresponding RTP timestamp) facilitating inter-stream synchronization.
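The interarrival jitter carried in an RR is defined in RFC 3550 (section 6.4.1) as a running estimate, J += (|D| - J) / 16, where D is the change in transit time (arrival time minus RTP timestamp) between consecutive packets. A minimal Java sketch, assuming arrival times have already been converted to the stream's RTP clock units; the class name `JitterEstimator` is hypothetical:

```java
// Illustrative sketch of the RFC 3550 jitter formula; the class name is hypothetical.
final class JitterEstimator {
    private boolean havePrevious = false;
    private long prevTransit;     // arrival time minus RTP timestamp of the previous packet
    private double jitter = 0.0;  // current estimate, in RTP clock units

    /** Feeds one packet: its RTP timestamp and its arrival time, both in RTP clock units. */
    void onPacket(long rtpTimestamp, long arrivalInRtpUnits) {
        long transit = arrivalInRtpUnits - rtpTimestamp;
        if (havePrevious) {
            // Casting to int folds the difference into 32 bits, so a timestamp wrap cancels out
            long d = Math.abs((long) (int) (transit - prevTransit));
            jitter += (d - jitter) / 16.0;   // J += (|D| - J) / 16
        }
        prevTransit = transit;
        havePrevious = true;
    }

    double jitter() {
        return jitter;
    }

    public static void main(String[] args) {
        JitterEstimator est = new JitterEstimator();
        // 8 kHz audio, 20 ms packets (160 ticks apart); the second packet arrives 16 ticks late
        est.onPacket(0, 1000);
        est.onPacket(160, 1176);
        System.out.println(est.jitter()); // 1.0
    }
}
```

The RFC's reference code keeps the estimate as a scaled integer; a `double` is used here only for readability.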

SRTP secures RTP with encryption, message authentication and replay protection, while SDP is used to describe and negotiate session parameters.

In this article I will go over RTP and its associated protocols in depth to show the inner workings of an RTP media streaming session.

RTP (Real-time Transport Protocol)

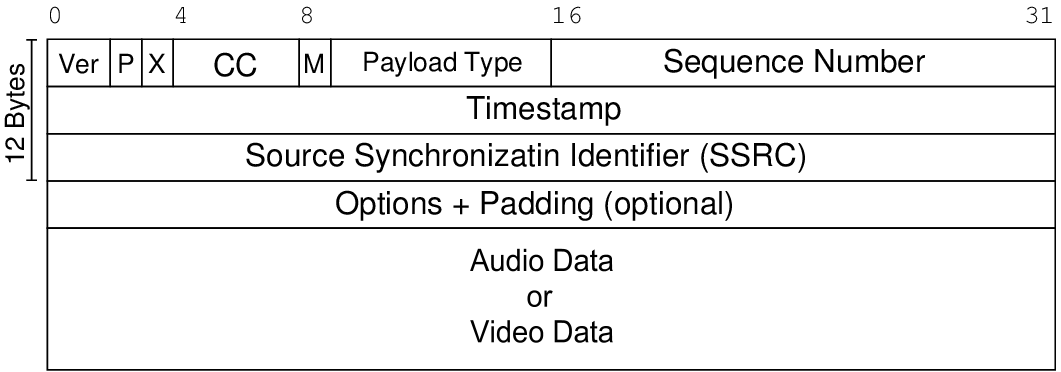

RTP (RFC 3550) handles real-time multimedia transport between end-to-end network components. Its header format is extensible, it simplifies application integration (encryption, padding), and it supports intermediaries such as proxies, mixers and translators.

RTP is independent of the underlying transport and network layers and can be described as an application-layer protocol, typically run over UDP/IP. While RTP was originally adapted from VAT (now obsolete), it was designed to be protocol independent, i.e., it can be used over non-IP networks such as ATM AAL5 as well as over IPv4 and IPv6. It does not address resource reservation and does not guarantee quality of service for real-time services. It does, however, provide payload type identification, sequence numbering, timestamping and delivery monitoring.

The sequence numbers included in RTP allow the receiver to reconstruct the sender's packet sequence. Typical uses: multimedia multi-participant conferences, storage of continuous data, interactive distributed simulation, active badges, and control and measurement applications.

RTP Session

The following packet, from a G.711 PCMU audio stream, is shown as dissected by Wireshark:

Real-Time Transport Protocol

[Stream setup by SDP (frame 554)]

[Setup frame: 554]

[Setup Method: SDP]

10.. .... = Version: RFC 1889 Version (2)

..0. .... = Padding: False

...0 .... = Extension: False

.... 0000 = Contributing source identifiers count: 0

0... .... = Marker: False

Payload type: ITU-T G.711 PCMU (0)

Sequence number: 39644

[Extended sequence number: 39644]

Timestamp: 2256601824

Synchronization Source identifier: 0x78006c62 (2013293666)

Payload: 7efefefe7efefe7e7efefe7e7efefe7e7efefe7e7efefe7e...
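To see how these fields sit on the wire, here is a minimal Java sketch that parses the 12-byte fixed header (and any CSRC list) of an RTP packet. The demo rebuilds the header bytes from the field values in the capture above; the class and field names are illustrative and not taken from any particular RTP library:

```java
import java.nio.ByteBuffer;

// Illustrative sketch; class and field names are hypothetical.
// Parses the RTP fixed header (RFC 3550, section 5.1) and the CSRC list that follows it.
final class RtpHeader {
    int version, csrcCount, payloadType, sequenceNumber;
    boolean padding, extension, marker;
    long timestamp, ssrc;   // unsigned 32-bit values kept in a long
    long[] csrcs;

    static RtpHeader parse(byte[] packet) {
        if (packet.length < 12) throw new IllegalArgumentException("shorter than the 12-byte fixed header");
        ByteBuffer buf = ByteBuffer.wrap(packet);   // big-endian (network byte order) by default
        int b0 = buf.get() & 0xFF;
        int b1 = buf.get() & 0xFF;
        RtpHeader h = new RtpHeader();
        h.version = b0 >>> 6;               // V: top 2 bits
        h.padding = (b0 & 0x20) != 0;       // P
        h.extension = (b0 & 0x10) != 0;     // X
        h.csrcCount = b0 & 0x0F;            // CC: number of CSRC entries
        h.marker = (b1 & 0x80) != 0;        // M
        h.payloadType = b1 & 0x7F;          // PT: 7 bits
        h.sequenceNumber = buf.getShort() & 0xFFFF;
        h.timestamp = buf.getInt() & 0xFFFFFFFFL;
        h.ssrc = buf.getInt() & 0xFFFFFFFFL;
        if (packet.length < 12 + 4 * h.csrcCount) throw new IllegalArgumentException("truncated CSRC list");
        h.csrcs = new long[h.csrcCount];
        for (int i = 0; i < h.csrcCount; i++) {
            h.csrcs[i] = buf.getInt() & 0xFFFFFFFFL;
        }
        return h;
    }

    public static void main(String[] args) {
        // Header rebuilt from the field values in the capture: V=2, PT=0 (PCMU), no CSRCs
        byte[] pkt = ByteBuffer.allocate(12)
                .put((byte) 0x80).put((byte) 0x00)
                .putShort((short) 39644)
                .putInt((int) 2256601824L)
                .putInt(0x78006c62)
                .array();
        RtpHeader h = parse(pkt);
        System.out.printf("V=%d PT=%d SN=%d TS=%d SSRC=0x%08x%n",
                h.version, h.payloadType, h.sequenceNumber, h.timestamp, h.ssrc);
    }
}
```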

Ordering via Timestamp (TS) and Sequence Number (SN)

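- TS (timestamp) reflects the sampling instant of the first octet of the payload; the receiver uses it to play the media out with the correct timing.

- SN (sequence number) increments by one for each RTP packet sent; the receiver uses it to restore the packet order and to detect packet loss.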

For a video frame that spans multiple packets – TS is same but SN is different.
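Because the sequence number is only 16 bits wide, it wraps from 65535 back to 0, so comparing raw values breaks at the wrap. A common approach, sketched below in Java, interprets the difference modulo 2^16 as a signed number; the helper names are hypothetical:

```java
// Illustrative sketch; class and method names are hypothetical.
final class SeqMath {
    /** Signed distance from a to b in the 16-bit space: positive if b was sent after a. */
    static int delta(int a, int b) {
        return (short) (b - a);   // the cast folds the difference into -32768..32767
    }

    /** True if sequence number b is newer than a, even across the 65535 -> 0 wrap. */
    static boolean isNewer(int b, int a) {
        return delta(a, b) > 0;
    }

    /** Packets missing between two consecutively received, in-order packets. */
    static int gap(int previous, int current) {
        int d = delta(previous, current);
        return d > 1 ? d - 1 : 0;
    }

    public static void main(String[] args) {
        System.out.println(isNewer(3, 65534));   // true: 65534, 65535, 0, 1, 2, 3
        System.out.println(gap(65534, 3));       // 4 (65535, 0, 1 and 2 are missing)
    }
}
```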

Payload

RTP payload type is a 7-bit numeric identifier that identifies a payload format.

Audio

- 0 PCMU

- 1 reserved (previously FS-1016 CELP)

- 2 reserved (previously G721 or G726-32)

- 3 GSM

- 4 G723

- 8 PCMA

- 9 G722

- 12 QCELP

- 13 CN

- 14 MPA

- 15 G728

- 18 G729

- 19 reserved (previously CN)

Video

- 25 CELB

- 26 JPEG

- 28 nv

- 31 H261

- 32 MPV

- 33 MP2T

- 34 H263

- 72-76 reserved

- 77–95 unassigned

- dynamic (96–127): H263-1998, H263-2000

- dynamic (96–127, or assigned by a profile), audio and video: H264 AVC, H264 SVC, H265, theora, iLBC, PCMA-WB (wideband G.711 A-law), PCMU-WB (wideband G.711 μ-law), G718, G719, G7221, vorbis, opus, speex, VP8, VP9, raw, ac3, eac3

Note on the difference between PCMA (G.711 A-law) and PCMU (G.711 μ-law): G.711 μ-law tends to give more resolution to higher-range signals, while G.711 A-law provides more quantization levels at lower signal levels.
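To make the companding concrete, here is a sketch of μ-law (PCMU) encoding and decoding of 16-bit linear samples, following the bias-and-segment formulation widely used in public-domain G.711 reference code; A-law uses a different segment layout and is not shown. Decoding the first payload bytes of the capture above (0x7e, 0xfe) gives -8 and +8, i.e. near-silence. The class name is hypothetical:

```java
// Illustrative sketch; class name is hypothetical.
final class MuLaw {
    private static final int BIAS = 0x84;   // 132, added before locating the segment
    private static final int CLIP = 32635;  // keeps magnitude + BIAS within 15 bits

    /** Encodes one 16-bit sample (-32768..32767) to a mu-law byte (0..255). */
    static int encode(int pcm16) {
        int sign = pcm16 < 0 ? 0x80 : 0;
        int magnitude = Math.min(Math.abs(pcm16), CLIP) + BIAS;
        // Segment (exponent 0..7): position of the highest set bit, counted from bit 7
        int exponent = (31 - Integer.numberOfLeadingZeros(magnitude)) - 7;
        int mantissa = (magnitude >> (exponent + 3)) & 0x0F;
        return ~(sign | (exponent << 4) | mantissa) & 0xFF;   // bits are inverted on the wire
    }

    /** Decodes a mu-law byte back to a 16-bit linear sample. */
    static int decode(int ulaw) {
        int u = ~ulaw & 0xFF;
        int exponent = (u >> 4) & 0x07;
        int mantissa = u & 0x0F;
        int magnitude = (((mantissa << 3) + BIAS) << exponent) - BIAS;
        return (u & 0x80) != 0 ? -magnitude : magnitude;
    }

    public static void main(String[] args) {
        int[] payload = {0x7e, 0xfe, 0xfe, 0xfe};   // first bytes of the captured PCMU payload
        for (int b : payload) System.out.print(decode(b) + " ");   // -8 8 8 8
        System.out.println();
    }
}
```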

Dynamic Payloads

Dynamic payload types (96–127) in the RTP A/V profile, unlike the static ones above, are not assigned by IANA. They are bound to an encoding by means outside the RTP profile or protocol specifications, typically through SDP during session setup.
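In practice the binding for a dynamic payload type usually arrives in an SDP `a=rtpmap` attribute (`a=rtpmap:<payload type> <encoding name>/<clock rate>[/<encoding parameters>]`). A minimal Java sketch of building the lookup table a receiver needs; the class name and the sample SDP lines are made up for illustration:

```java
import java.util.HashMap;
import java.util.Map;

// Illustrative sketch; class names and sample SDP are hypothetical.
final class RtpMap {
    static final class Encoding {
        final String name;
        final int clockRate;
        final String params;   // e.g. channel count for audio, or null

        Encoding(String name, int clockRate, String params) {
            this.name = name;
            this.clockRate = clockRate;
            this.params = params;
        }

        @Override
        public String toString() {
            return name + "/" + clockRate + (params == null ? "" : "/" + params);
        }
    }

    /** Collects payload type -> encoding from every a=rtpmap line in an SDP body. */
    static Map<Integer, Encoding> parse(String sdp) {
        Map<Integer, Encoding> map = new HashMap<>();
        for (String line : sdp.split("\r?\n")) {
            if (!line.startsWith("a=rtpmap:")) continue;
            String[] ptAndRest = line.substring("a=rtpmap:".length()).trim().split("\\s+", 2);
            String[] parts = ptAndRest[1].split("/");
            String params = parts.length > 2 ? parts[2] : null;
            map.put(Integer.parseInt(ptAndRest[0]), new Encoding(parts[0], Integer.parseInt(parts[1]), params));
        }
        return map;
    }

    public static void main(String[] args) {
        String sdp = "m=video 5004 RTP/AVP 96\r\n"
                + "a=rtpmap:96 H264/90000\r\n"
                + "m=audio 5006 RTP/AVP 111\r\n"
                + "a=rtpmap:111 opus/48000/2\r\n";
        System.out.println(parse(sdp));
    }
}
```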

Tones

- dynamic tone

- telephone event ( DTMF)

These codes were initially specified in RFC 1890, "RTP Profile for Audio and Video Conferences with Minimal Control" (the AVP profile), which was superseded by RFC 3551, and they are registered as MIME types in RFC 3555. Registering new static payload types is now considered a deprecated practice in favor of dynamic payload type negotiation.

Session identifiers

SSRC was designed to distinguish several sources by labelling them differently. In an RTP session, each participant maintains a full, separate space of SSRC identifiers. The set of participants included in one RTP session consists of those that can receive an SSRC identifier transmitted by any one of the participants, either in RTP as the SSRC or a CSRC, or in RTCP.

         0                   1                   2                   3
         0 1 2 3 4 5 6 7 8 9 0 1 2 3 4 5 6 7 8 9 0 1 2 3 4 5 6 7 8 9 0 1
        +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
        |V=2|P|X|  CC   |M|     PT      |       sequence number         |
        +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
        |                           timestamp                           |
        +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
        |           synchronization source (SSRC) identifier            |
        +=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+
        |            contributing source (CSRC) identifiers             |
        |                             ....                              |
        +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

Synchronization source (SSRC): a 32-bit numeric identifier for the source of a stream of RTP packets. The identifier is chosen randomly, with the intent that no two synchronization sources within the same RTP session will have the same SSRC identifier. All packets from a synchronization source form part of the same timing and sequence number space, so a receiver groups packets by synchronization source for playback. The binding of SSRC identifiers to participants (via their canonical name, CNAME) is provided through RTCP. If a participant generates multiple streams in one RTP session, for example from separate video cameras, each MUST be identified as a different SSRC.
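A minimal Java sketch of picking a random SSRC, assuming the set of SSRCs already seen in the session is known. It only avoids values that are already known; RFC 3550 also requires detecting and resolving collisions that show up later, which is not shown. `SsrcAllocator` is a hypothetical name:

```java
import java.security.SecureRandom;
import java.util.HashSet;
import java.util.Set;

// Illustrative sketch; class and method names are hypothetical.
final class SsrcAllocator {
    private static final SecureRandom RNG = new SecureRandom();

    /** Draws random 32-bit values until one is not used by a known source in the session. */
    static long chooseSsrc(Set<Long> ssrcsInUse) {
        long candidate;
        do {
            candidate = RNG.nextInt() & 0xFFFFFFFFL;   // uniform over 0 .. 2^32 - 1
        } while (ssrcsInUse.contains(candidate));
        return candidate;
    }

    public static void main(String[] args) {
        Set<Long> inUse = new HashSet<>();
        inUse.add(0x78006c62L);   // the SSRC seen in the capture earlier
        System.out.printf("chosen SSRC: 0x%08x%n", chooseSsrc(inUse));
    }
}
```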

Contributing source (CSRC): a source of a stream of RTP packets that has contributed to the combined stream produced by an RTP mixer. The mixer inserts a list of the SSRC identifiers of the sources that contributed to the generation of a particular packet, called the CSRC list, into the RTP header of that packet. An example application is audio conferencing, where a mixer lists all the talkers whose speech was combined to produce the outgoing packet, allowing the receiver to indicate the current talker even though all the audio packets contain the same SSRC identifier (that of the mixer).

Timestamp calculation

The initial timestamp is picked randomly and independently of the other streams in the session. It is then incremented by the packetization interval multiplied by the clock rate. For example:

- audio with an 8000 Hz clock packetized every 20 ms advances by 160 per packet (t2 = t1 + 160, ...). The actual sampling rate may differ slightly from this nominal rate.

- video with a 90 kHz clock at 30 frames/s advances by 3000 per frame (t2 = t1 + 3000, ...); at 25 frames/s it advances by 3600 (t2 = t1 + 3600, ...). Some software encoders also compute timestamps from the system clock, e.g. with gettimeofday().
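The arithmetic above as a small Java sketch (hypothetical helper names); the last method shows that the 32-bit timestamp wraps modulo 2^32:

```java
// Illustrative sketch; class and method names are hypothetical.
final class RtpClock {
    /** Per-packet increment: clock rate (Hz) x packetization interval (ms) / 1000. */
    static long incrementForInterval(int clockRateHz, int intervalMs) {
        return (long) clockRateHz * intervalMs / 1000;
    }

    /** Per-frame increment for video (integer division: exact only if the frame rate divides the clock rate). */
    static long incrementPerFrame(int clockRateHz, int framesPerSecond) {
        return clockRateHz / framesPerSecond;
    }

    /** Adds an increment to a 32-bit RTP timestamp, wrapping modulo 2^32. */
    static long advance(long timestamp, long increment) {
        return (timestamp + increment) & 0xFFFFFFFFL;
    }

    public static void main(String[] args) {
        System.out.println(incrementForInterval(8000, 20));   // 160
        System.out.println(incrementPerFrame(90000, 30));     // 3000
        System.out.println(incrementPerFrame(90000, 25));     // 3600
        System.out.println(advance(0xFFFFFFF0L, 160));        // 144 (wrapped)
    }
}
```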

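Cross-media synchronization using timestamps: each RTCP Sender Report pairs an RTP timestamp with an NTP (wallclock) timestamp. Together they identify the absolute time of a particular sample in the stream, which lets a receiver align streams such as audio and video.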
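A receiver can map any RTP timestamp of a stream to wallclock time by extrapolating from that stream's latest Sender Report pair: wallclock = NTP_SR + (RTP - RTP_SR) / clock rate. Doing this for the audio and the video stream of the same participant gives comparable times for lip sync. A minimal Java sketch (names hypothetical), assuming the 64-bit NTP format of 32 bits of seconds since 1900 followed by 32 bits of fraction:

```java
// Illustrative sketch; class and method names are hypothetical.
final class MediaClock {
    /** Converts a 64-bit NTP timestamp (seconds in the high 32 bits, fraction in the low 32) to seconds. */
    static double ntpToSeconds(long ntp64) {
        long seconds = ntp64 >>> 32;
        long fraction = ntp64 & 0xFFFFFFFFL;
        return seconds + fraction / 4294967296.0;
    }

    /** Maps an RTP timestamp to NTP seconds using the (NTP, RTP) pair from the latest SR of the same SSRC. */
    static double toWallclock(long rtpTs, long srRtpTs, long srNtp64, int clockRateHz) {
        int ticks = (int) (rtpTs - srRtpTs);   // signed 32-bit difference, safe across a wrap
        return ntpToSeconds(srNtp64) + (double) ticks / clockRateHz;
    }

    public static void main(String[] args) {
        long srNtp = (1000L << 32) | 0x80000000L;   // hypothetical SR time: 1000.5 s
        // An audio sample 8000 ticks (1 s at 8 kHz) after the SR's RTP timestamp
        System.out.println(toWallclock(8000, 0, srNtp, 8000));   // 1001.5
    }
}
```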

UDP provides best-effort delivery of datagrams for point-to-point as well as for multicast communications.

Threading and Queues by RTP stacks

Reception and transmission queues are handled by the RTP stack.

Packet reception: the application does not read packets directly from sockets but gets them from a reception queue. The RTP stack is responsible for updating this queue.

Packet transmission: packets are not written directly to sockets but inserted into a transmission queue handled by the stack.

The incoming packet queue takes care of functions such as packet reordering and filtering out duplicate packets.

Threading model: many libraries use a separate execution thread for each RTP session to handle the queues.
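A reception queue that reorders packets and drops duplicates can be sketched in Java as below. It assumes the caller has already converted 16-bit sequence numbers into extended (unwrapped) ones and, unlike a real jitter buffer, it does not hold packets back to wait for late arrivals. The methods are synchronized so a network thread can call `offer()` while the application thread calls `poll()`; the class name is hypothetical:

```java
import java.util.Map;
import java.util.TreeMap;

// Illustrative sketch; class and method names are hypothetical.
final class ReceptionQueue {
    private final TreeMap<Long, byte[]> pending = new TreeMap<>();   // ordered by extended sequence number
    private long lastDelivered = -1;   // extended sequence number of the last packet handed to the app

    /** Called by the receive thread; returns false for duplicates and packets arriving after their turn. */
    synchronized boolean offer(long extendedSeq, byte[] packet) {
        if (extendedSeq <= lastDelivered) return false;                     // too late
        return pending.putIfAbsent(extendedSeq, packet) == null;            // false if duplicate
    }

    /** Called by the application thread; returns the lowest-numbered pending packet, or null. */
    synchronized byte[] poll() {
        Map.Entry<Long, byte[]> first = pending.pollFirstEntry();
        if (first == null) return null;
        lastDelivered = first.getKey();
        return first.getValue();
    }

    public static void main(String[] args) {
        ReceptionQueue q = new ReceptionQueue();
        q.offer(3, new byte[] {3});
        q.offer(1, new byte[] {1});
        q.offer(2, new byte[] {2});
        for (byte[] p; (p = q.poll()) != null; ) System.out.print(p[0] + " ");   // 1 2 3
        System.out.println();
    }
}
```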

RTSP (Real-Time Streaming Protocol)

RTSP is a streaming session-control protocol used together with RTP. It is a network control protocol that typically runs over TCP to maintain an end-to-end connection. Session protocols such as RTSP are negotiation and session establishment protocols that assist multimedia applications.

Applications: controlling real-time streaming media such as live audio and HD video streams.

RTSP establishes a media session between RTSP endpoints (which can also be two RTSP media servers) and initiates RTP streams to deliver the audio and video payload from the RTSP media servers to the clients.

Flow for RTSP stream between client and Server

1. Initialize the RTP stack on the server and the client. This can be done by calling the constructor for each object and initializing it with arguments.

At the server

    Server rtspserver = new Server();

At the client

    Client rtspclient = new Client();

2. Open a TCP connection (via sockets) between the client and the server for the RTSP session

At the server

    ServerSocket listenSocket = new ServerSocket(RTSPport);
    rtspserver.RTSPsocket = listenSocket.accept();
    rtspserver.ClientIPAddr = rtspserver.RTSPsocket.getInetAddress();

At the client

    rtspclient.RTSPsocket = new Socket(ServerIPAddr, RTSP_server_port);

3. Set up buffered input and output streams on the RTSP socket

At the server

    RTSPBufferedReader = new BufferedReader(new InputStreamReader(rtspserver.RTSPsocket.getInputStream()));
    RTSPBufferedWriter = new BufferedWriter(new OutputStreamWriter(rtspserver.RTSPsocket.getOutputStream()));

4. Parse and reply to RTSP commands

Read a line from RTSPBufferedReader and parse its tokens to get the RTSP request type:

    request = rtspserver.parse_RTSP_request();

On receiving each request, send the appropriate response using RTSPBufferedWriter:

    rtspserver.send_RTSP_response();

The request can be one of DESCRIBE, SETUP, PLAY, PAUSE or TEARDOWN.
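The article does not show `parse_RTSP_request()` or `send_RTSP_response()`. As a hypothetical stand-in, the Java sketch below reads an RTSP/1.0 request line and headers from a `BufferedReader` and builds a minimal `200 OK` reply that echoes the request's `CSeq`, as RTSP requires. The URL, session ID and class name are made up, and a real SETUP reply would also return a `Transport` header:

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.StringReader;
import java.util.Map;
import java.util.TreeMap;

// Illustrative sketch; class name, URL and session ID are hypothetical.
final class RtspMessage {
    String method, uri, version;
    final Map<String, String> headers = new TreeMap<>(String.CASE_INSENSITIVE_ORDER);  // header names are case-insensitive

    /** Reads the request line and the headers up to the empty line that ends the header block. */
    static RtspMessage readRequest(BufferedReader in) throws IOException {
        String requestLine = in.readLine();
        if (requestLine == null) throw new IOException("connection closed");
        String[] tokens = requestLine.trim().split("\\s+");
        if (tokens.length != 3) throw new IOException("bad request line: " + requestLine);
        RtspMessage m = new RtspMessage();
        m.method = tokens[0];    // DESCRIBE, SETUP, PLAY, PAUSE, TEARDOWN, ...
        m.uri = tokens[1];
        m.version = tokens[2];   // "RTSP/1.0"
        String line;
        while ((line = in.readLine()) != null && !line.isEmpty()) {
            int colon = line.indexOf(':');
            if (colon > 0) m.headers.put(line.substring(0, colon).trim(), line.substring(colon + 1).trim());
        }
        return m;
    }

    /** Builds a 200 OK reply echoing the request's CSeq. */
    static String okResponse(RtspMessage request, String sessionId) {
        return "RTSP/1.0 200 OK\r\n"
                + "CSeq: " + request.headers.get("CSeq") + "\r\n"
                + "Session: " + sessionId + "\r\n"
                + "\r\n";
    }

    public static void main(String[] args) throws IOException {
        String raw = "SETUP rtsp://example.com/movie RTSP/1.0\r\n"
                + "CSeq: 2\r\n"
                + "Transport: RTP/AVP;unicast;client_port=25000-25001\r\n"
                + "\r\n";
        RtspMessage req = readRequest(new BufferedReader(new StringReader(raw)));
        System.out.print(okResponse(req, "123456"));
    }
}
```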

5. TEARDOWN RTSP command

On TEARDOWN, either destroy the session object, which releases its resources and ends the session, or explicitly send an RTCP BYE and close the sockets:

    rtspserver.RTSPsocket.close();
    rtspserver.RTPsocket.close();

RTP processing

1. At the transmitter (server): packetize the video data into RTP packets.

This involves creating the packet, setting the fields in the packet header, and copying the payload (i.e., one video frame) into the packet. Get the next frame to send from the video and build the RTP packet:

    RTPpacket rtp_packet = new RTPpacket(MJPEG_TYPE, imagenb, imagenb * FRAME_PERIOD, buf, video.getnextframe(buf));

2. The RTP header is formed from the constructor parameters: payload type, sequence number and timestamp. The frame data (the byte[] buffer) and its length (the value returned by getnextframe) become the payload.
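The `RTPpacket` class itself is not shown in the article. A minimal Java sketch of what such a constructor has to do, writing the fixed header in network byte order followed by the frame bytes; the class and method names are hypothetical. The demo uses static payload type 26 (JPEG) and the 90 kHz video clock, so frame 5 at 25 frames/s gets timestamp 5 × 3600:

```java
import java.nio.ByteBuffer;

// Illustrative sketch; class and method names are hypothetical.
// Builds an RTP packet with a 12-byte fixed header (no CSRCs, no extension) plus payload.
final class RtpPacketBuilder {
    static byte[] build(int payloadType, int sequenceNumber, long timestamp, long ssrc,
                        boolean marker, byte[] payload, int payloadLength) {
        ByteBuffer buf = ByteBuffer.allocate(12 + payloadLength);         // big-endian by default
        buf.put((byte) 0x80);                                              // V=2, P=0, X=0, CC=0
        buf.put((byte) ((marker ? 0x80 : 0) | (payloadType & 0x7F)));     // M bit + 7-bit PT
        buf.putShort((short) sequenceNumber);                              // low 16 bits
        buf.putInt((int) timestamp);                                       // low 32 bits
        buf.putInt((int) ssrc);
        buf.put(payload, 0, payloadLength);
        return buf.array();
    }

    public static void main(String[] args) {
        byte[] frame = {(byte) 0xFF, (byte) 0xD8, (byte) 0xFF, (byte) 0xE0};   // placeholder frame bytes
        byte[] packet = build(26, 5, 5 * 3600, 0x1234, true, frame, frame.length);
        System.out.println(packet.length);   // 16
    }
}
```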

3. Transmitter: send the packet.

Retrieve the packet bitstream into a byte array and send it as a datagram over a UDP socket:

    senddp = new DatagramPacket(packet_bits, packet_length, ClientIPAddr, RTP_dest_port);
    RTPsocket.send(senddp);

4. Receiver: receive packets.

Construct a new DatagramSocket on the client's RTP port to receive RTP packets, then receive each one into a DatagramPacket:

    rcvdp = new DatagramPacket(buf, buf.length);
    RTPsocket.receive(rcvdp);

5. Receiver: retrieve the RTP packet header and payload.

Parse the received datagram into an RTPpacket and read its fields:

    RTPpacket rtp_packet = new RTPpacket(rcvdp.getData(), rcvdp.getLength());
    rtp_packet.getsequencenumber();
    rtp_packet.getpayload(payload); // payload receives the bitstream

6. Decode the payload as an image, video frame or audio segment and pass it on for consumption by a player, file, socket, etc.

SRTP (Secure Real-time Transport Protocol)

Neither RTP nor RTCP provides any encryption or authentication, which is where SRTP comes into the picture. SRTP is a security layer that resides between the RTP/RTCP application layer and the transport layer. It provides confidentiality, message authentication, and replay protection for both unicast and multicast RTP and RTCP streams.

The cryptographic context includes:

- the session keys, used directly for encryption and message authentication

- the master key, a securely exchanged random bit string from which the session keys are derived

- other working session parameters (master key lifetime, master key identifier and length, FEC parameters, etc.)

The context must be maintained by both the sender and the receiver of these streams.

“Salting keys” are used to protect against pre-computation and time-memory tradeoff attacks.
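Replay protection can be pictured as a sliding window over the SRTP packet index (rollover counter × 2^16 + sequence number): a packet is rejected if it is older than the window or has already been seen. A minimal Java sketch, assuming a 64-packet window and that `update()` is called only after the packet has passed authentication; the class name is hypothetical:

```java
// Illustrative sketch; class name and 64-packet window size are assumptions.
final class ReplayWindow {
    private static final int WINDOW = 64;
    private long highest = -1;   // highest packet index accepted so far
    private long bitmap = 0;     // bit i set => index (highest - i) has been received

    /** True if the index is new and not too old to be checked. */
    boolean check(long index) {
        if (highest < 0 || index > highest) return true;   // ahead of the window
        long age = highest - index;
        if (age >= WINDOW) return false;                    // too old to verify: reject
        return (bitmap & (1L << age)) == 0;                 // reject if already seen
    }

    /** Records an authenticated packet index. */
    void update(long index) {
        if (highest < 0) {
            highest = index;
            bitmap = 1L;
        } else if (index > highest) {
            long shift = index - highest;
            bitmap = (shift >= WINDOW ? 0 : bitmap << shift) | 1L;   // slide the window forward
            highest = index;
        } else {
            bitmap |= 1L << (highest - index);
        }
    }

    public static void main(String[] args) {
        ReplayWindow w = new ReplayWindow();
        w.update(100);
        System.out.println(w.check(100));   // false: replayed
        System.out.println(w.check(99));    // true: late but new
    }
}
```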

To learn more about SRTP specifically, see: https://telecom.altanai.com/2018/03/16/secure-communication-with-rtp-srtp-zrtp-and-dtls/

RTP in a VoIP Communication system and Conference streaming

Simulcast

The client encodes the same audio/video stream twice, at different resolutions and bitrates, and sends both to a router, which then decides which receiver gets which stream.

Multicast Audio Conference

Assume the conference has obtained a multicast group address and a pair of ports. One port is used for audio data, and the other is used for control (RTCP) packets. The audio conferencing application used by each conference participant sends audio data in small chunks of, say, 20 ms duration. Each chunk of audio data is preceded by an RTP header; the RTP header and data are in turn contained in a UDP packet.

The RTP header indicates what type of audio encoding (such as PCM, ADPCM or LPC) is contained in each packet so that senders can change the encoding during a conference, for example, to accommodate a new participant that is connected through a low-bandwidth link or react to indications of network congestion.

Packet networks occasionally lose and reorder packets and delay them by variable amounts of time. To cope with this, the RTP header contains timing information and a sequence number that allow the receivers to reconstruct the timing produced by the source. The sequence number can also be used by the receiver to estimate how many packets are being lost.
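The loss estimate that ends up in a Receiver Report can be computed as in RFC 3550, appendix A.3: expected = highest sequence number - first sequence number + 1, lost = expected - received, and the fraction lost since the previous report is sent as an 8-bit fixed-point value (lost_interval × 256 / expected_interval). A minimal Java sketch, assuming extended (unwrapped) sequence numbers; the class name is hypothetical:

```java
// Illustrative sketch of RFC 3550 appendix A.3; class and method names are hypothetical.
final class LossStats {
    private final long baseSeq;            // first extended sequence number seen
    private long maxSeq;                   // highest extended sequence number seen
    private long received;                 // packets received (duplicates are counted, as in the RFC)
    private long expectedPrior, receivedPrior;

    LossStats(long firstSeq) {
        baseSeq = firstSeq;
        maxSeq = firstSeq;
        received = 1;
    }

    void onPacket(long extendedSeq) {
        if (extendedSeq > maxSeq) maxSeq = extendedSeq;
        received++;
    }

    long expected() {
        return maxSeq - baseSeq + 1;
    }

    /** Cumulative packets lost; can go negative when duplicates arrive. */
    long cumulativeLost() {
        return expected() - received;
    }

    /** Fraction lost since the last call, as the 8-bit fixed-point value carried in an RR. */
    int fractionLostAndReset() {
        long expectedInterval = expected() - expectedPrior;
        long receivedInterval = received - receivedPrior;
        expectedPrior = expected();
        receivedPrior = received;
        long lostInterval = expectedInterval - receivedInterval;
        if (expectedInterval == 0 || lostInterval <= 0) return 0;
        return (int) ((lostInterval << 8) / expectedInterval);
    }

    public static void main(String[] args) {
        LossStats s = new LossStats(0);
        for (long seq : new long[] {1, 2, 4, 5}) s.onPacket(seq);   // packet 3 never arrives
        System.out.println(s.cumulativeLost());         // 1
        System.out.println(s.fractionLostAndReset());   // 42 (about 1/6 of 256)
    }
}
```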

For QoS monitoring, each instance of the audio application in the conference periodically multicasts a reception report plus the name of its user on the RTCP (control) port. The reception report indicates how well the current speaker is being received and may be used to control adaptive encodings. In addition to the user name, other identifying information may also be included, subject to control bandwidth limits.
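How often those reports go out is governed by the RTCP control bandwidth. A simplified Java sketch of the deterministic interval from RFC 3550, section 6.3.1, assuming the recommended 5% RTCP share and 5 s minimum; it deliberately omits the sender/receiver bandwidth split, the halved minimum for the first report, and the randomization applied afterwards. The class name is hypothetical:

```java
// Illustrative, simplified sketch; class and method names are hypothetical.
final class RtcpInterval {
    static final double RTCP_FRACTION = 0.05;   // RTCP share of the session bandwidth
    static final double T_MIN_SECONDS = 5.0;    // recommended minimum interval

    /** Td = max(Tmin, n * C), where C = average RTCP packet size / RTCP bandwidth (bytes per second). */
    static double deterministicInterval(double sessionBandwidthBps, int members, double avgRtcpPacketBytes) {
        double rtcpBytesPerSecond = RTCP_FRACTION * sessionBandwidthBps / 8.0;
        double c = avgRtcpPacketBytes / rtcpBytesPerSecond;
        return Math.max(T_MIN_SECONDS, members * c);
    }

    public static void main(String[] args) {
        // 64 kbit/s session with 100-byte RTCP packets
        System.out.println(deterministicInterval(64_000, 10, 100));    // 5.0 (minimum applies)
        System.out.println(deterministicInterval(64_000, 100, 100));   // 25.0
    }
}
```

With more members, each one reports less often, so total RTCP traffic stays near its 5% share.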

A site sends the RTCP BYE packet when it leaves the conference.

Audio and Video Conference

Audio and video media are transmitted as separate RTP sessions: separate RTP and RTCP packets are transmitted for each medium using two different UDP port pairs and/or multicast addresses. There is no direct coupling at the RTP level between the audio and video sessions, except that a user participating in both sessions should use the same distinguished (canonical) name in the RTCP packets for both, so that the sessions can be associated.

Synchronized playback of a source's audio and video is achieved using the timing information carried in the RTCP packets for both sessions.

Layered Encodings

To reconcile the conflicting bandwidth requirements of heterogeneous receivers, multimedia applications should be able to adjust the transmission rate to match the capacity of the receiver or to adapt to network congestion.

Rate adaptation should be done by a layered encoding with a layered transmission system.

In the context of RTP over IP multicast, the source can stripe the progressive layers of a hierarchically represented signal across multiple RTP sessions, each carried on its own multicast group. Receivers can then adapt to network heterogeneity and control their reception bandwidth by joining only the appropriate subset of the multicast groups.
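A minimal Java sketch of the receiver's decision: given hypothetical per-layer bitrates (base layer first) and an available-bandwidth estimate obtained elsewhere, count how many multicast groups to join. Joining itself would use the platform's multicast API and is not shown; the class name is hypothetical:

```java
// Illustrative sketch; class name and bitrates are hypothetical.
final class LayerSelector {
    /**
     * Each layer is only useful together with all layers below it, so layers are taken in order
     * while their cumulative bitrate still fits. Returns 0 if even the base layer does not fit.
     */
    static int layersToJoin(int[] layerBitratesKbps, int availableKbps) {
        int cumulative = 0;
        int count = 0;
        for (int rate : layerBitratesKbps) {
            if (cumulative + rate > availableKbps) break;
            cumulative += rate;
            count++;
        }
        return count;
    }

    public static void main(String[] args) {
        int[] layers = {64, 128, 256};   // base layer, then enhancement layers (kbit/s)
        System.out.println(layersToJoin(layers, 200));   // 2: 64 + 128 fits, adding 256 does not
    }
}
```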

Mixers, Translators and Monitors

Note that in a VoIP system where SIP is the signalling protocol, a SIP signalling proxy never participates in the media flow; it is media agnostic.

Mixer

An intermediate system that receives RTP packets from one or more sources, possibly changes the data format, combines the packets in some manner and then forwards a new RTP packet.

Example: a mixer for high-speed to low-speed stream conversion. In conferences where a few participants are connected through a low-speed link while others have high-speed links, instead of forcing everyone to use a lower-bandwidth, reduced-quality audio encoding, an RTP-level relay called a mixer may be placed near the low-bandwidth area.

This mixer resynchronises incoming audio packets to reconstruct the constant 20 ms spacing generated by the sender, mixes these reconstructed audio streams into a single stream, translates the audio encoding to a lower-bandwidth one and forwards the lower-bandwidth packet stream across the low-speed links.

All data packets originating from a mixer will be identified as having the mixer as their synchronization source.

RTP header includes a means for mixers to identify the sources that contributed to a mixed packet so that correct talker indication can be provided at the receivers.
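The core of such an audio mixer can be sketched in Java: sum the decoded 16-bit samples of one block from each contributing source with saturation, and keep the contributors' SSRCs for the CSRC list, which holds at most 15 entries because the CC field is 4 bits. Decoding, resynchronization and re-encoding are assumed to happen elsewhere; the class name is hypothetical:

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Illustrative sketch; class and method names are hypothetical.
final class AudioMixer {
    static final int MAX_CSRCS = 15;   // the CC field is 4 bits wide

    /** Sums one block of decoded 16-bit samples from each source, clipping instead of wrapping. */
    static short[] mix(List<short[]> blocks, int samplesPerBlock) {
        short[] out = new short[samplesPerBlock];
        for (int i = 0; i < samplesPerBlock; i++) {
            int sum = 0;
            for (short[] block : blocks) sum += block[i];
            out[i] = (short) Math.max(Short.MIN_VALUE, Math.min(Short.MAX_VALUE, sum));
        }
        return out;
    }

    /** SSRCs of the contributing sources, truncated to what fits in the CSRC list. */
    static List<Long> csrcList(List<Long> contributingSsrcs) {
        int n = Math.min(MAX_CSRCS, contributingSsrcs.size());
        return new ArrayList<>(contributingSsrcs.subList(0, n));
    }

    public static void main(String[] args) {
        short[] alice = {1000, 30000, -30000};
        short[] bob = {2000, 10000, -10000};
        System.out.println(Arrays.toString(mix(Arrays.asList(alice, bob), 3)));   // [3000, 32767, -32768]
    }
}
```

The outgoing packet then carries the mixer's own SSRC, with the list returned by `csrcList` in its CSRC fields.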

Translator

An intermediate system that forwards RTP packets with their synchronization source identifier intact.

Examples of translators include devices that convert encodings without mixing, replicators from multicast to unicast, and application-level filters in firewalls.

Translators across a firewall that blocks IP packets

Some of the intended participants in the audio conference may be connected with high bandwidth links but might not be directly reachable via IP multicast, for reasons such as being behind an application-level firewall that will not let any IP packets pass. For these sites, mixing may not be necessary, in which case another type of RTP-level relay called a translator may be used.

Two translators are installed, one on either side of the firewall, with the outside one funneling all multicast packets received through a secure connection to the translator inside the firewall. The translator inside the firewall sends them again as multicast packets to a multicast group restricted to the site's internal network.

Other cases:

Video mixers can scale the images of individual people in separate video streams and composite them into one video stream to simulate a group scene.

Translators can connect a group of hosts speaking only IP/UDP to a group of hosts that understand only ST-II, or perform packet-by-packet encoding translation of video streams from individual sources without resynchronization or mixing.

Monitor

An application that receives RTCP packets sent by participants in an RTP session, in particular the reception reports, and estimates the current quality of service for distribution monitoring, fault diagnosis and long-term statistics.


Multiplexing RTP Sessions

In RTP, multiplexing is provided by the destination transport address (network address and port number), which is different for each RTP session (e.g., separate sessions for audio and video). Keeping media in separate sessions avoids problems with changes of encoding or clock rate, with detecting which stream suffered packet loss, and with RTCP reporting.

Moreover, an RTP mixer would not be able to combine interleaved streams of incompatible media into one stream.

Interleaving packets with different RTP media types but using the same SSRC would introduce several problems. But multiplexing multiple related sources of the same medium in one RTP session using different SSRC values is the norm for multicast sessions.

REMB ( Receiver Estimated Maximum Bitrate)

An RTCP message used to provide bandwidth estimation in order to avoid creating congestion in the network. Support for this message is negotiated in the SDP offer/answer exchange. It contains the total estimated available bitrate on the path to the receiving side of this RTP session (in mantissa + exponent format). REMB is used:

- by the sender, to configure the maximum bitrate of the video encoding

- by media servers, to notify the available bandwidth in the network and limit the bitrate the sender is allowed to send
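REMB is defined in an IETF draft (draft-alvestrand-rmcat-remb) rather than an RFC; its bitrate field is a 6-bit exponent and an 18-bit mantissa, with bitrate = mantissa × 2^exponent bits/s. A minimal Java sketch of encoding and decoding that field (the class name is hypothetical):

```java
// Illustrative sketch; class name is hypothetical.
final class RembBitrate {
    static final long MAX_MANTISSA = (1L << 18) - 1;   // 18-bit mantissa

    /** Returns {exponent, mantissa}; dropped low bits mean the encoded value never exceeds the input. */
    static int[] encode(long bitrateBps) {
        int exp = 0;
        long mantissa = bitrateBps;
        while (mantissa > MAX_MANTISSA) {
            mantissa >>= 1;
            exp++;
        }
        return new int[] {exp, (int) mantissa};
    }

    static long decode(int exp, int mantissa) {
        return (long) mantissa << exp;
    }

    public static void main(String[] args) {
        int[] f = encode(1_000_000);
        System.out.println(f[0] + " " + f[1] + " -> " + decode(f[0], f[1]));   // 2 250000 -> 1000000
    }
}
```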

In Chrome it is deprecated in favor of the new sender side bandwidth estimation based on RTCP Transport Feedback messages.

Session Description Protocol (SDP) Capability Negotiation

SDP Offer/Answer flow

RTP can carry multiple formats. For each class of application (e.g., audio, video), RTP defines a profile and associated payload formats. The Session Description Protocol is used to specify the parameters for the sessions.

In VoIP systems, SDP bodies describing a session (codecs, open ports, media formats, etc.) are usually embedded in a SIP request such as INVITE.

SDP capability negotiation (RFC 5939) can negotiate the use of one out of several possible transport protocols. The offerer uses the expected least-common-denominator (plain RTP) as the actual configuration, and the alternative transport protocols as the potential configurations. In the example below, the m= line offers PCMU (0) and G.729 (18) over plain RTP, and the tcap attribute lists the alternatives:

    m=audio 53456 RTP/AVP 0 18
    a=tcap:1 RTP/SAVPF RTP/SAVP RTP/AVPF

The transport protocols involved are:

- plain RTP (RTP/AVP)

- Secure RTP (RTP/SAVP)

- RTP with RTCP-based feedback (RTP/AVPF)

- Secure RTP with RTCP-based feedback (RTP/SAVPF)
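Per RFC 5939, the protocols in an `a=tcap` line are numbered consecutively starting from the given capability number, and potential configurations (`a=pcfg`) then refer to those numbers. A minimal Java sketch that expands the example line above (class name hypothetical):

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Illustrative sketch; class name is hypothetical.
final class TcapParser {
    /** Expands "a=tcap:<first number> <proto> <proto> ..." into capability number -> transport protocol. */
    static Map<Integer, String> parse(String tcapLine) {
        String prefix = "a=tcap:";
        if (!tcapLine.startsWith(prefix)) throw new IllegalArgumentException("not a tcap line");
        String[] tokens = tcapLine.substring(prefix.length()).trim().split("\\s+");
        int first = Integer.parseInt(tokens[0]);
        Map<Integer, String> caps = new LinkedHashMap<>();
        for (int i = 1; i < tokens.length; i++) {
            caps.put(first + i - 1, tokens[i]);   // numbered consecutively from the first number
        }
        return caps;
    }

    public static void main(String[] args) {
        System.out.println(parse("a=tcap:1 RTP/SAVPF RTP/SAVP RTP/AVPF"));   // {1=RTP/SAVPF, 2=RTP/SAVP, 3=RTP/AVPF}
    }
}
```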

Adaptive bitrate control

Adapt the audio and video codec bitrates to the available bandwidth, and hence optimize audio and video quality. For video, since the resolution is chosen only at the start, the encoder adapts at runtime using only the bitrate and frame-rate attributes.

An RTCP feedback message called TMMBR (Temporary Maximum Media Stream Bit Rate Request, RFC 5104) is sent to the remote client to request that it limit its sending bitrate.

References:

- RFC 3550: RTP: A Transport Protocol for Real-Time Applications

- RFC 4585: Extended RTP Profile for Real-time Transport Control Protocol (RTCP)-Based Feedback (RTP/AVPF)

- RFC 3711: The Secure Real-time Transport Protocol (SRTP)

- Real-Time Transport Protocol (RTP) Parameters, Internet Assigned Numbers Authority (IANA): http://www.iana.org/assignments/rtp-parameters/rtp-parameters.xhtml

- RTSP communication: https://www.w3.org/2008/WebVideo/Fragments/wiki/UA_Server_RTSP_Communication

- JMF, Java Media Framework: https://www.oracle.com/java/technologies/javase/java-media-framework.html