- Point to Point

- Point to Point via Middlebox

- Translator

- Transport/Relay Anchoring

- Transport translator

- Media translator

- Back-To-Back RTP Session

- Point to Multipoint using Multicast

- Any-Source Multicast (ASM)

- Source-Specific Multicast (SSM)

- Point to Multipoint using Mesh

- Point to Multipoint + Translator

- Media Mixing Mixer

- Media Swithing Mixer

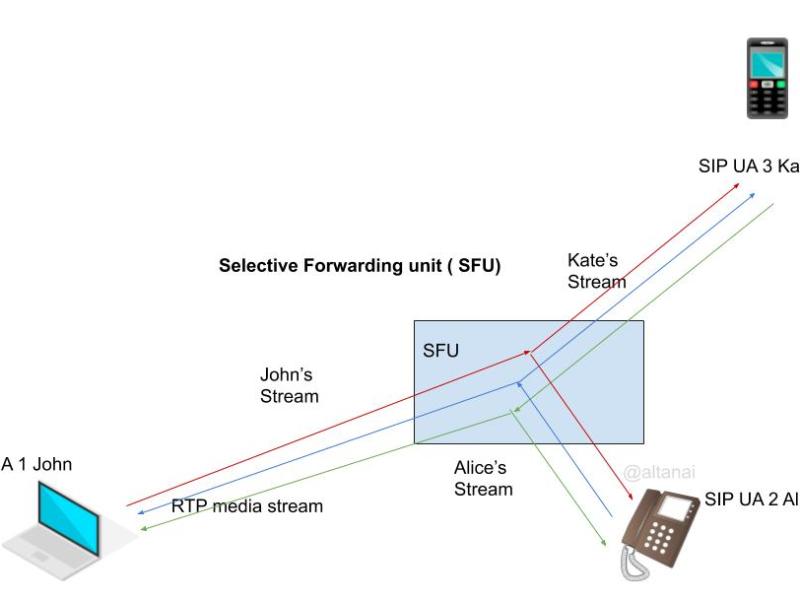

- SFU ( Selective Forwarding Unit)

- Simulcast

- SVC

- Hybrid Topologies

- Hybrid model of forwarding and mixed streams

- Serverless models

- Point to Multipoint Using Video-Switching MCUs

- Cascaded SFUs

- Transport Protocols

- Audio PCAP storage and Privacy constraints for Media Servers

- CDR (Call Detail Records)

- Signalling PCAPs

- Media Stats

- Audio PCAPs

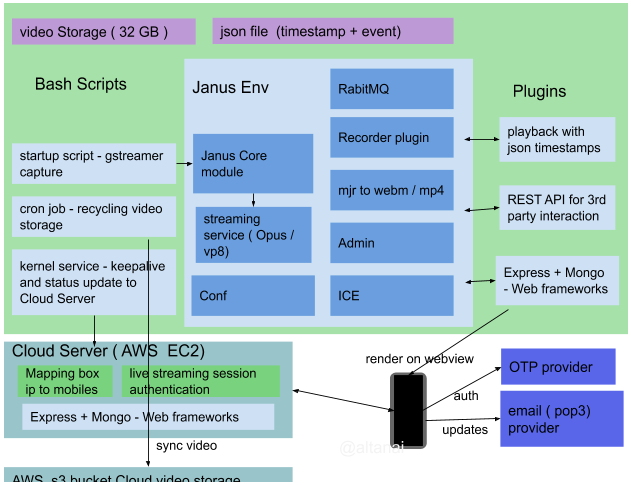

With the sudden onset of COVID-19 and the growing trend of working from home, the demand for scalable conferencing solutions and virtual meeting rooms has skyrocketed. Here is my advice if you are building an auto-scalable conferencing solution.

This article is about media server setups that provide mid- to high-scale conferencing over SIP to various endpoints, including SIP softphones, PBXs, carriers/PSTN, and WebRTC clients.

Point to Point

Endpoints communicate over unicast. RTP and RTCP traffic is private between sender and receiver, even if the endpoints send multiple SSRCs in the RTP session.

| Advantages of P2P | Disadvantages of P2P |
| --- | --- |
| (+) Facilitates private communication between the parties | (-) The only limits on the number of streams between the participants are physical ones, such as bandwidth and the number of available ports |

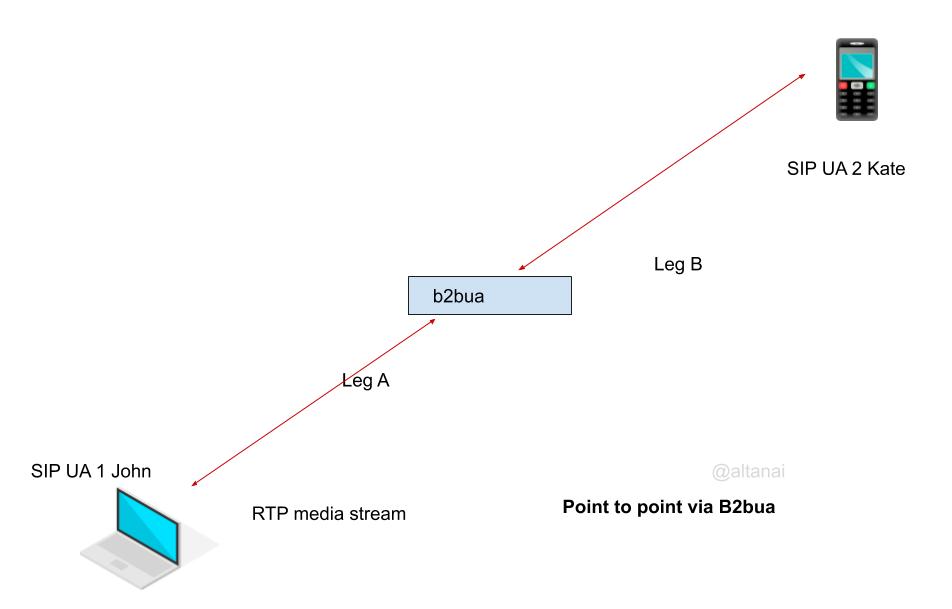
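Because one RTP session can carry several SSRCs, a receiver separates the streams by the SSRC in each packet's header. As a minimal sketch in Python, assuming plain RTP packets as defined in RFC 3550 (header extensions and padding are not interpreted), the hypothetical helpers below parse the fixed header and group packets per SSRC:

```python
import struct
from collections import defaultdict
from typing import NamedTuple


class RtpHeader(NamedTuple):
    version: int
    payload_type: int
    marker: bool
    sequence: int
    timestamp: int
    ssrc: int
    csrcs: tuple


def parse_rtp_header(packet: bytes) -> RtpHeader:
    """Parse the 12-byte fixed RTP header plus the CSRC list, if any."""
    if len(packet) < 12:
        raise ValueError("packet shorter than the 12-byte fixed RTP header")
    b0, b1, seq, ts, ssrc = struct.unpack("!BBHII", packet[:12])
    csrc_count = b0 & 0x0F              # CC field: number of CSRC entries
    end = 12 + 4 * csrc_count
    if len(packet) < end:
        raise ValueError("truncated CSRC list")
    csrcs = struct.unpack(f"!{csrc_count}I", packet[12:end])
    return RtpHeader(b0 >> 6, b1 & 0x7F, bool(b1 & 0x80), seq, ts, ssrc, csrcs)


def demux_by_ssrc(packets):
    """Group the packets of one RTP session by their SSRC."""
    streams = defaultdict(list)
    for pkt in packets:
        streams[parse_rtp_header(pkt).ssrc].append(pkt)
    return dict(streams)
```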

Point to Point via Middlebox

Same as above, but with a middlebox involved. The middlebox types are:

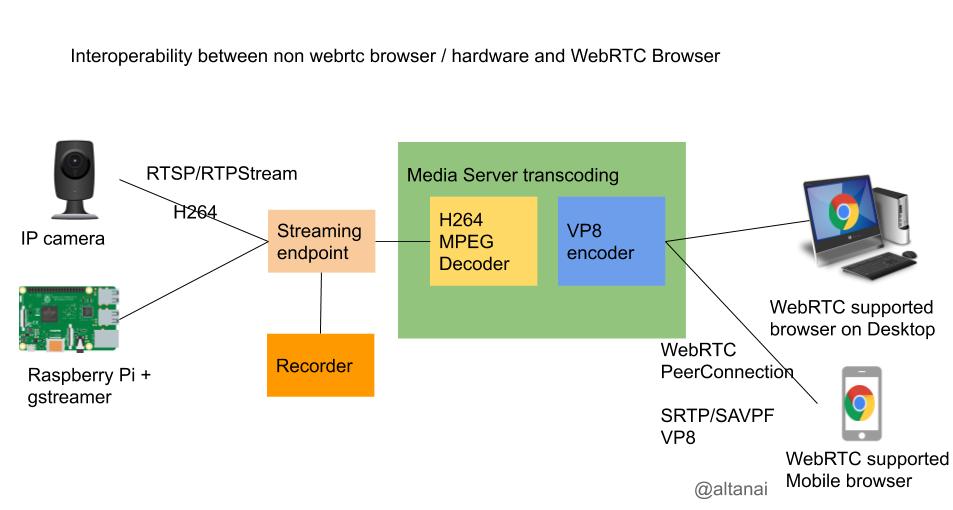

Translator

Mostly used to provide interoperability between otherwise non-interoperable endpoints, for example by transcoding codecs or converting transports. A translator does not use an SSRC of its own and keeps the SSRC of an RTP stream across the translation.

Middlebox subtypes:

Transport/Relay Anchoring

Roles such as NAT traversal, by pinning the media path to a relay in the public address domain or to a TURN server

Middleboxes for auditing, or for privacy control of participants' IP addresses

Other characteristics of an SBC (Session Border Controller) are also part of this topology setup

Transport translator

Interconnecting networks, for example multicast to unicast

Media packetization that allows other media, such as non-RTP protocols, to connect to the session

Media translator

Modifies the media inside RTP streams; this is commonly known as transcoding.

It can perform anything up to full decoding and re-encoding of RTP streams. In many cases it can also act on behalf of endpoints without RTP support, receiving and responding to feedback reports and performing FEC (forward error correction).

Back-To-Back RTP Session

A middlebox much like a translator, but it establishes a separate RTP session leg with each endpoint and bridges the two sessions.

It takes complete responsibility for forwarding the correct RTP payload and for maintaining the relation between SSRCs and CNAMEs.

| Advantages of Back-To-Back RTP Session | Disadvantages of Back-To-Back RTP Session |
| --- | --- |
| (+) The B2BUA / media bridge takes responsibility for relaying and manages congestion | (-) It can be subjected to MITM (man-in-the-middle) attacks or have a backdoor to eavesdrop on conversations |
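To keep the relation between SSRCs and CNAMEs across its two legs, a back-to-back bridge needs some kind of mapping table. The class below is a hypothetical sketch of that bookkeeping only; the class and method names are illustrative, and rewriting the RTP and RTCP packets themselves is left out:

```python
import random


class SsrcBridge:
    """Hypothetical SSRC/CNAME mapping for a back-to-back RTP session.

    Each leg is a separate RTP session, so the bridge picks a fresh SSRC for
    every stream it re-originates and remembers which source it stands for.
    """

    def __init__(self, seed=None):
        self._rng = random.Random(seed)
        self._out_ssrc = {}   # (incoming leg, incoming SSRC) -> outgoing SSRC
        self._cname = {}      # outgoing SSRC -> CNAME of the original source
        self._used = set()

    def outgoing_ssrc(self, in_leg: str, in_ssrc: int, cname: str) -> int:
        key = (in_leg, in_ssrc)
        if key not in self._out_ssrc:
            ssrc = self._rng.getrandbits(32)
            while ssrc in self._used:       # avoid collisions on the other leg
                ssrc = self._rng.getrandbits(32)
            self._used.add(ssrc)
            self._out_ssrc[key] = ssrc
            self._cname[ssrc] = cname
        return self._out_ssrc[key]

    def cname_for(self, out_ssrc: int) -> str:
        return self._cname[out_ssrc]
```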

Point to Multipoint using Multicast

Any-Source Multicast (ASM)

Traffic from any participant sent to the multicast group address reaches all other participants.

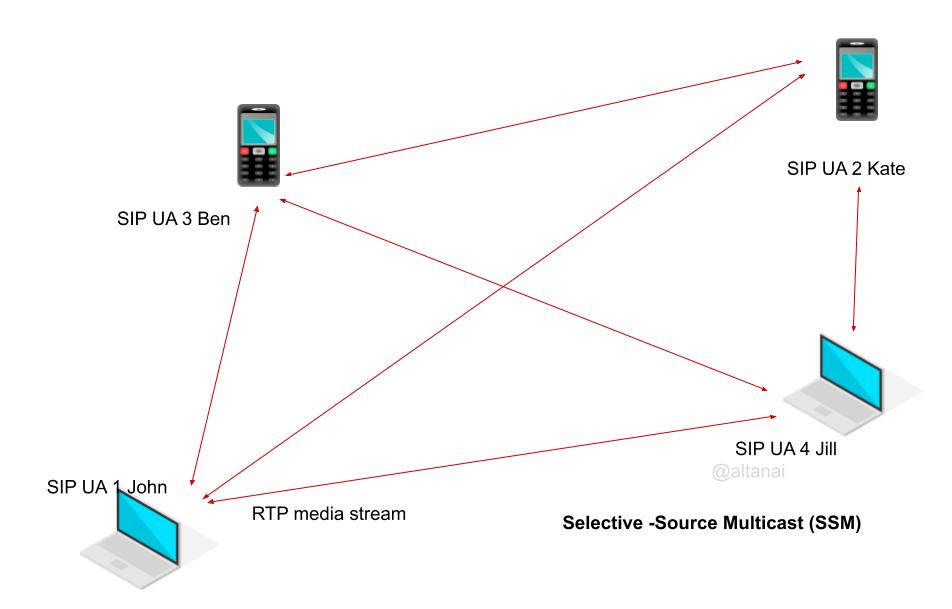
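Receiving ASM traffic amounts to joining the group address with the standard IPv4 IP_ADD_MEMBERSHIP socket option. A minimal sketch using Python's socket module; the group address and port are up to the caller, and the source-specific variant used for SSM is not shown because its option structure is platform-specific:

```python
import socket
import struct


def asm_membership_request(group: str, interface: str = "0.0.0.0") -> bytes:
    """Build struct ip_mreq (IPv4): the 4-byte group address followed by
    the 4-byte local interface address (0.0.0.0 lets the OS choose)."""
    return struct.pack("4s4s", socket.inet_aton(group), socket.inet_aton(interface))


def open_asm_receiver(group: str, port: int) -> socket.socket:
    """Join an any-source multicast group; packets sent by *any* source
    to group:port are delivered to the returned socket."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP,
                    asm_membership_request(group))
    return sock
```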

Source-Specific Multicast (SSM)

A selected sender streams to the multicast group, which delivers the stream to the receivers.

Point to Multipoint using Mesh

Many unicast RTP streams form a mesh, with each endpoint sending its media directly to every other endpoint.
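The cost of a mesh grows quickly, because every endpoint uploads a separate copy of its stream to each peer. A small sketch of the arithmetic; the per-stream bitrate is a hypothetical input, not a figure from this article:

```python
def mesh_load(n: int, stream_kbps: int) -> dict:
    """Per-endpoint and total load of a full mesh of n endpoints in which
    every endpoint sends its stream directly to each of the others."""
    if n < 2:
        raise ValueError("a mesh needs at least two endpoints")
    return {
        "upload_streams": n - 1,                # one copy per remote peer
        "download_streams": n - 1,              # one stream from each peer
        "upload_kbps": (n - 1) * stream_kbps,
        "total_streams": n * (n - 1),           # unicast streams in the whole mesh
    }
```

For example, `mesh_load(6, 1500)` gives 5 upload streams, 7500 kbps of upload per endpoint, and 30 streams in total, which illustrates why larger rooms move to a centralized topology.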

Point to Multipoint + Translator

Further variants of this topology are Point to Multipoint with a Mixer:

Media Mixing Mixer

Receives RTP streams from several endpoints and selects the stream(s) to be included in a media-domain mix. The selection can be through static configuration or by dynamic, content-dependent means such as voice activation. The mixer then creates a single outgoing RTP stream from this mix.
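The mixing step itself is simple once the selected streams have been decoded to PCM. A sketch assuming 16-bit linear PCM frames of equal length (decoding, resampling, and re-encoding are not shown); the `mix_minus` variant, which gives each participant a mix without their own voice, is a common practice rather than something this article prescribes:

```python
INT16_MIN, INT16_MAX = -32768, 32767


def clip16(sample: int) -> int:
    """Saturate a summed sample to the signed 16-bit PCM range."""
    return max(INT16_MIN, min(INT16_MAX, sample))


def mix(frames: dict[str, list[int]]) -> list[int]:
    """Single mix of all selected, already-decoded 16-bit PCM frames."""
    return [clip16(sum(samples)) for samples in zip(*frames.values())]


def mix_minus(frames: dict[str, list[int]]) -> dict[str, list[int]]:
    """Per-participant mix that leaves out each participant's own audio."""
    if len({len(f) for f in frames.values()}) > 1:
        raise ValueError("all frames must have the same number of samples")
    totals = [sum(samples) for samples in zip(*frames.values())]
    return {
        who: [clip16(t - own) for t, own in zip(totals, frame)]
        for who, frame in frames.items()
    }
```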

Media Switching Mixer

An RTP mixer based on media switching avoids the media decoding and encoding operations in the mixer, as it conceptually forwards the encoded media stream.

The mixer can reduce the bitrate or switch between sources, for example to follow the active speaker.

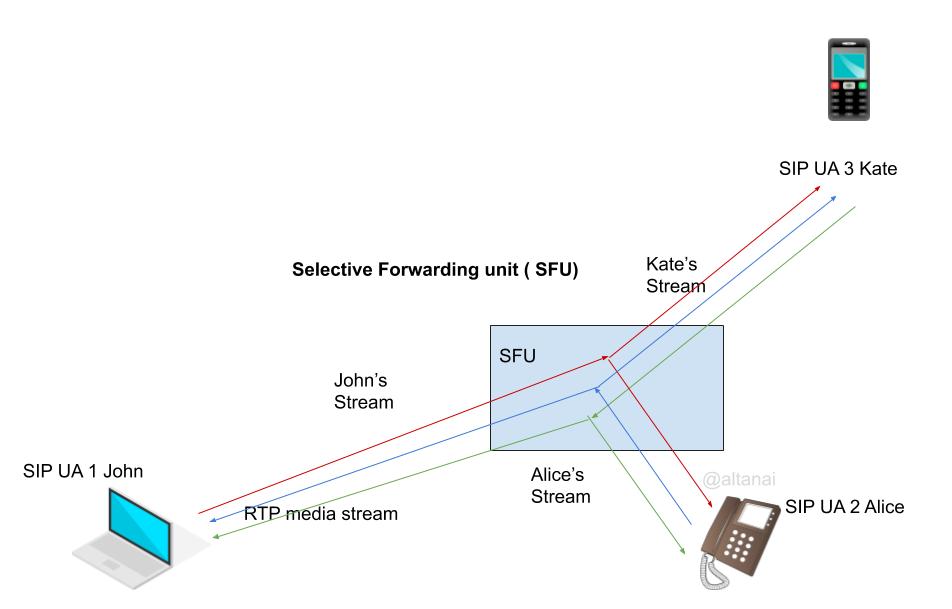
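A media-switching mixer has to decide which source to forward. One possible sketch, assuming per-packet audio levels in the RFC 6464 convention (0 is loudest, 127 is silence) are available; the smoothing factor and the hysteresis threshold, which stops the mixer flapping between speakers at similar levels, are hypothetical tuning values:

```python
class ActiveSpeakerSelector:
    """Choose which SSRC a media-switching mixer forwards, based on audio
    levels in -dBov (0 = loudest, 127 = silence)."""

    def __init__(self, alpha: float = 0.2, hysteresis_db: float = 6.0):
        self.alpha = alpha                  # weight of the newest level sample
        self.hysteresis_db = hysteresis_db  # how much louder a challenger must be
        self.smoothed = {}                  # ssrc -> smoothed level (-dBov)
        self.current = None                 # SSRC currently being forwarded

    def on_packet(self, ssrc: int, level: int):
        prev = self.smoothed.get(ssrc, 127.0)
        self.smoothed[ssrc] = prev + self.alpha * (level - prev)
        loudest = min(self.smoothed, key=self.smoothed.get)
        if self.current is None:
            self.current = loudest
        elif loudest != self.current:
            current_level = self.smoothed.get(self.current, 127.0)
            # switch only if the challenger is clearly louder
            if current_level - self.smoothed[loudest] >= self.hysteresis_db:
                self.current = loudest
        return self.current
```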

SFU (Selective Forwarding Unit)

A middlebox that selects which of the potential sources (SSRCs) transmitting media are sent to each of the endpoints. Each such transmission is set up as an independent RTP session.

Extensively used in videoconferencing topologies with scalable video coding as well as simulcasting.

| Advantages of SFU | Disadvantages of SFU |
| --- | --- |
| (+) Low latency and a low jitter-buffer requirement, since media is not re-encoded | (-) Unable to manage the network and control the bitrate |
| (+) Saves encoding/decoding CPU utilization at the server | (-) Creates a higher load on receivers compared with an MCU |
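Selective forwarding is essentially a routing table from incoming SSRCs to interested receivers, with no decoding involved. A toy sketch of that table; the class and method names are hypothetical, and a real SFU would also handle RTCP and bandwidth estimation:

```python
from collections import defaultdict


class SelectiveForwarder:
    """Toy SFU routing table: each receiver subscribes to a subset of the
    senders' SSRCs, and packets are copied without being decoded."""

    def __init__(self):
        self.subscriptions = defaultdict(set)   # receiver id -> wanted SSRCs
        self.owner = {}                         # SSRC -> sending endpoint id

    def add_sender(self, endpoint: str, ssrc: int):
        self.owner[ssrc] = endpoint

    def subscribe(self, receiver: str, ssrc: int):
        if self.owner.get(ssrc) == receiver:
            return  # never echo an endpoint's own media back to it
        self.subscriptions[receiver].add(ssrc)

    def route(self, ssrc: int) -> list:
        """Receivers that should get a copy of a packet from this SSRC."""
        return sorted(r for r, wanted in self.subscriptions.items() if ssrc in wanted)
```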

At a high level, given the current average internet bandwidth, a rule of thumb is that a mesh architecture makes sense for roughly 3 to 6 peers; any number above that requires a centralized media architecture.

Among the centralized media architectures, an SFU makes sense for at most about 6 to 15 participants in a conference; if the number of participants exceeds that, the conference may need to switch to MCU mode.
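These rules of thumb can be written down as a simple decision function. The thresholds below are the article's approximate figures turned into hypothetical defaults (the boundaries are fuzzy in practice), and the stream counts assume one stream per media type from each sender:

```python
def suggest_topology(participants: int, mesh_max: int = 6, sfu_max: int = 15) -> str:
    """Mesh for small calls, an SFU for mid-size conferences, MCU beyond that."""
    if participants < 2:
        raise ValueError("a conference needs at least two participants")
    if participants == 2:
        return "p2p"
    if participants <= mesh_max:
        return "mesh"
    if participants <= sfu_max:
        return "sfu"
    return "mcu"


def downlink_streams(topology: str, participants: int) -> int:
    """Streams each endpoint receives per media type."""
    if topology in ("p2p", "mesh", "sfu"):
        # one stream from every other participant (an upper bound for an SFU,
        # which may forward fewer)
        return participants - 1
    if topology == "mcu":
        return 1  # a single mixed/composited stream
    raise ValueError(f"unknown topology: {topology}")
```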

Simulcast

The sender encodes the same media in multiple variations (for example, different resolutions or bitrates) and lets the SFU decide which endpoint receives which variant.

| Advantages of SFU + Simulcast | Disadvantages of SFU + Simulcast |
| --- | --- |
| (+) Simulcast can ensure that endpoints receive a media stream matching their requirements, bandwidth, and display | (-) High uplink bandwidth requirement |
| | (-) CPU-intensive for the sender, which must encode many variations of the outgoing stream |
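The SFU's choice of simulcast variant per receiver can be sketched as picking the best encoding that fits the receiver's estimated bandwidth and display size. The layer names, resolutions, and bitrates are hypothetical, and how the bandwidth estimate is obtained is out of scope:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SimulcastLayer:
    name: str           # label of the encoding (hypothetical)
    height: int         # vertical resolution in pixels
    bitrate_kbps: int


def pick_layer(layers, available_kbps, max_height=None):
    """Best layer that fits the receiver's bandwidth and display size;
    falls back to the lowest-bitrate layer if nothing fits."""
    ordered = sorted(layers, key=lambda layer: layer.bitrate_kbps)
    candidates = [
        layer for layer in ordered
        if layer.bitrate_kbps <= available_kbps
        and (max_height is None or layer.height <= max_height)
    ]
    return candidates[-1] if candidates else ordered[0]
```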

SVC (Scalable Video Coding)

Encodes in multiple layers based on various modalities, such as:

- Signal-to-noise ratio (quality)

- Temporal (frame rate)

- Spatial (resolution)
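Because SVC layers build on each other, an SFU can adapt one encoded stream per receiver by dropping the packets above a target layer. A sketch assuming the spatial, temporal, and quality layer IDs have already been read from the codec-specific payload descriptor or header extension (not shown), and that every layer depends only on layers with lower or equal IDs:

```python
from typing import NamedTuple


class SvcPacket(NamedTuple):
    spatial_id: int    # 0 = base resolution, higher = larger
    temporal_id: int   # 0 = base frame rate, higher = more frames
    quality_id: int    # 0 = base SNR/quality layer
    payload: bytes


def forward_layers(packets, max_spatial, max_temporal, max_quality):
    """Keep only the packets at or below the receiver's target layers;
    the remaining lower layers stay decodable on their own."""
    return [
        p for p in packets
        if p.spatial_id <= max_spatial
        and p.temporal_id <= max_temporal
        and p.quality_id <= max_quality
    ]
```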


Hybrid Topologies

There are various topologies for multi-endpoint conferences. Hybrid topologies include forwarding video while mixing audio, automatically switching between configurations as the load increases or decreases, or switching depending on whether a user is on a paid premium plan or a free plan.

Hybrid model of forwarding and mixed streams

Some endpoints receive forwarded streams while others receive mixed/composited streams.
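A hybrid setup boils down to a policy that decides, per room, how each media type is handled. The function below is a hypothetical example of such a policy (always mix audio, forward video up to a size limit, composite video for large free-plan rooms); the threshold and plan rules are assumptions for illustration only:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MediaPlan:
    audio: str   # "mix" or "forward"
    video: str   # "forward" or "composite"


def hybrid_plan(participants: int, premium: bool, sfu_max: int = 15) -> MediaPlan:
    """Hypothetical policy: audio is always mixed into one stream per
    receiver; video is forwarded SFU-style unless a free-plan room grows
    past sfu_max, in which case it falls back to one composited stream."""
    if participants <= sfu_max or premium:
        return MediaPlan(audio="mix", video="forward")
    return MediaPlan(audio="mix", video="composite")
```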

Serverless models

Centralized topology in which one endpoint serves as an MCU or SFU.

Used by Jitsi and Skype

Point to Multipoint Using Video-Switching MCUs

Much like an MCU, but instead of mixing it switches which stream (at which bitrate and resolution) is forwarded, based on the active speaker, the host or presenter, or other floor-control criteria.

This setup can combine the characteristics of a translator and a selector, and can even perform congestion control based on RTCP.

To handle a multipoint conference scenario, it acts as a translator: it forwards the selected RTP stream under its own SSRC, with the appropriate CSRC values, and modifies the RTCP RRs it forwards between the domains.
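The SSRC/CSRC handling described above can be sketched at the byte level. This assumes the MCU chooses its own outgoing sequence numbers and timestamps (passed in by the caller) so that receivers see one continuous stream across switches; the function name is hypothetical and the RTCP rewriting is not shown:

```python
import struct


def relabel_for_switching_mcu(packet: bytes, mcu_ssrc: int, seq: int, ts: int) -> bytes:
    """Send an RTP packet under the MCU's own SSRC, moving the original
    sender's SSRC into the CSRC list (RFC 3550 fixed header layout)."""
    b0, b1, _seq, _ts, orig_ssrc = struct.unpack("!BBHII", packet[:12])
    cc = b0 & 0x0F
    if cc == 15:
        raise ValueError("CSRC list already full")
    csrcs = packet[12:12 + 4 * cc]
    rest = packet[12 + 4 * cc:]          # header extension (if any) and payload
    new_b0 = (b0 & 0xF0) | (cc + 1)      # keep V/P/X bits, bump the CSRC count
    header = struct.pack("!BBHII", new_b0, b1, seq & 0xFFFF, ts & 0xFFFFFFFF, mcu_ssrc)
    return header + struct.pack("!I", orig_ssrc) + csrcs + rest
```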

Cascaded SFUs

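Chaining SFUs (for example, letting each participant connect to a nearby SFU that exchanges streams with the other SFUs) reduces latency while also enabling scalability; however, it takes a toll on server network resources as well as endpoint resources.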

Transport Protocols

Before getting into an in-depth discussion of all possible types of Media Architectures in VoIP systems, let us learn about TCP vs UDP.

TCP is a reliable, connection-oriented protocol: it establishes a connection between the communicating parties with a handshake (SYN, SYN-ACK, ACK) before sending data. It sequences the data it sends, so the receiver can reorder out-of-order segments, and lost segments are retransmitted individually. Because of this error correction and its congestion control, it is often used for session signalling, for example SIP over TCP or TLS.

Once a session is established, the media itself is usually carried as RTP over UDP. UDP does not guarantee delivery, ordering, or non-duplication, but it avoids TCP's retransmission delays, which hurt real-time audio and video more than occasional loss does; it is also easy to encapsulate packets of other protocols inside UDP. Because neither UDP nor plain RTP provides end-to-end security, separate mechanisms such as SRTP are used for authentication and encryption.
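UDP itself gives no indication of loss, so an RTP receiver infers it from gaps in the 16-bit RTP sequence numbers, taking wrap-around from 65535 to 0 into account. A simplified sketch in the spirit of the receiver statistics in RFC 3550 (it does not handle sender restarts or large sequence jumps):

```python
class SequenceTracker:
    """Receiver-side loss accounting from 16-bit RTP sequence numbers."""

    def __init__(self):
        self.first = None      # first sequence number seen
        self.highest = None    # extended (wrap-corrected) highest sequence number
        self.received = 0

    def on_packet(self, seq: int):
        self.received += 1
        if self.highest is None:
            self.first = self.highest = seq
            return
        # interpret the 16-bit difference as signed, so late packets give a
        # negative delta and a wrap from 65535 to 0 gives a small positive one
        delta = (seq - (self.highest & 0xFFFF) + 0x8000) % 0x10000 - 0x8000
        if delta > 0:
            self.highest += delta

    @property
    def expected(self) -> int:
        return 0 if self.highest is None else self.highest - self.first + 1

    @property
    def lost(self) -> int:
        # late packets are not counted as lost; duplicates can make this negative
        return self.expected - self.received
```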

Audio PCAP storage and Privacy constraints for Media Servers

A call session produces various traces for offline monitoring and analysis, which can include:

CDR (Call Detail Records) – to and from numbers, ring time, answer time, duration, etc.

Signalling PCAPs – usually collected from the SIP application server, containing the SIP requests and responses along with their SDP. They show the call-flow sequence, for example who sent the INVITE and who sent the BYE or CANCEL, and how many times the call was updated or paused/resumed.

Media stats – jitter, buffer, RTT, and MOS for all legs, along with average values.

Audio PCAPs – the recording of the RTP stream and RTCP packets between the parties, which requires explicit consent from the customer or user. Without that consent, VoIP companies complying with GDPR cannot record the audio streams of calls and retain them for any purpose, such as auditing, call-quality debugging, or their own inspection.
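Jitter, the first of the media stats listed above, is commonly reported as the interarrival jitter defined in RFC 3550 section 6.4.1: J = J + (|D| - J) / 16, where D is the change in transit time between consecutive packets, in RTP timestamp units. A minimal sketch (timestamp wrap-around is ignored, and arrival times are assumed to be in seconds):

```python
class JitterEstimator:
    """Interarrival jitter in RTP timestamp units, per RFC 3550."""

    def __init__(self, clock_rate: int):
        self.clock_rate = clock_rate     # e.g. 8000 Hz for G.711
        self.jitter = 0.0
        self._prev_transit = None

    def on_packet(self, rtp_timestamp: int, arrival_seconds: float) -> float:
        arrival = arrival_seconds * self.clock_rate   # arrival in timestamp units
        transit = arrival - rtp_timestamp
        if self._prev_transit is not None:
            d = abs(transit - self._prev_transit)
            self.jitter += (d - self.jitter) / 16     # exponential smoothing, gain 1/16
        self._prev_transit = transit
        return self.jitter

    def jitter_ms(self) -> float:
        return 1000.0 * self.jitter / self.clock_rate
```

For 20 ms G.711 packets (160 timestamp units apart), a single packet arriving 10 ms late adds 80 units of transit difference, which moves the estimate from 0 to 5 units (0.625 ms).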

To shed more light on audio PCAP storage: assuming the user has given explicit permission, here is the approach for carrying out the recording and storage operations.

Furthermore, strict access control, encryption, and anonymisation of the media packets are necessary to obfuscate details of the call session.
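One way to anonymise identifiers in stored call records while still being able to correlate them is keyed hashing (HMAC). A sketch using Python's standard `hmac` and `hashlib` modules; the field names and key handling are hypothetical, this covers pseudonymisation of call metadata rather than encryption of the audio itself, and pseudonymised data may still count as personal data under GDPR:

```python
import hashlib
import hmac


def pseudonymise(value: str, key: bytes) -> str:
    """Replace an identifier (number, SIP URI, IP address) with a keyed hash.
    The same input always maps to the same token, so records can still be
    correlated, but without the key tokens cannot be recomputed from guesses."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def anonymise_cdr(cdr: dict, key: bytes, fields=("from", "to", "src_ip", "dst_ip")) -> dict:
    """Copy of a CDR-like dict with the identifying fields pseudonymised."""
    return {k: pseudonymise(str(v), key) if k in fields else v for k, v in cdr.items()}
```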

References :

- RFC 7667 RTP Topologies – https://tools.ietf.org/html/rfc7667

To learn about the differences between media server topologies:

- centralized vs decentralized

- SFU vs MCU

- multicast vs unicast

Read – SIP conferencing and Media Bridges


To read more about building a scalable VoIP server-side architecture, including:

- Clustering the servers with a common cache for high availability and prompt failure recovery

- Multi-tier architecture, i.e. separation between the data/session layer and the application server/engine layer

- Microservice-based architecture, i.e. separation between proxies such as the load balancer, SBC, backend services, OSS/BSS, etc.

- Containerization and autoscaling

Read – VoIP/ OTT / Telecom Solution startup’s strategy for Building a scalable flexible SIP platform
